10 Lessons Learned at CES 2020

Article By: Junko Yoshida

What I derived from my tour of CES 2020. Dealing with big data, vision vs. lidar, what autonomy really means, and much, much more.

LAS VEGAS — After miles of roaming the show floor, countless press briefings and one-on-one interviews, what did we learn at CES 2020?

Anyone who survived last week’s ordeal in Las Vegas almost involuntarily comes away with a personal “takeaway” list. Below are the main perceptions I derived from my tour of CES 2020.

[Separately, I recorded my interviews with executives from leading automotive semiconductor companies and aired them on our radio show, CES 2020, Day 3: IC Vendors Talk Self-Driving. Please give it a listen.]

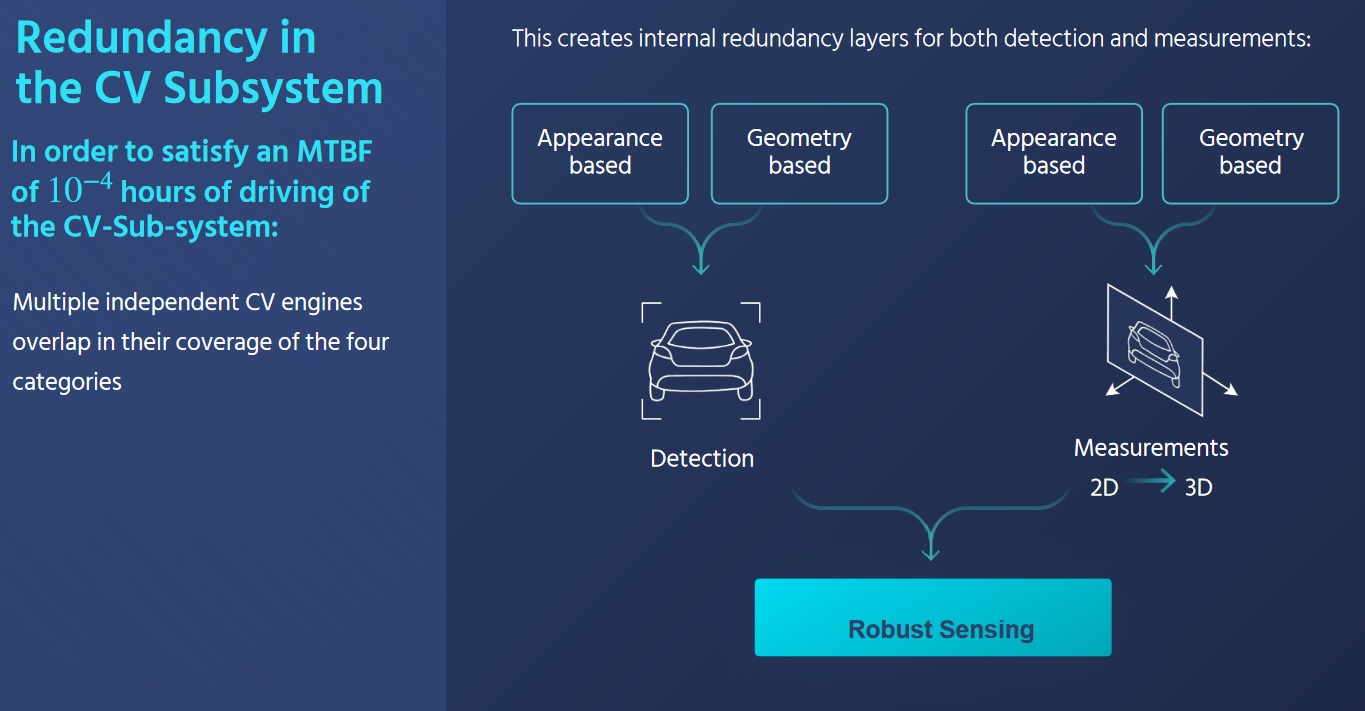

1. Camera-only AVs can create ‘internal redundancy’

Forget radars and lidars?

Experts in the automated vehicle (AV) industry maintain that redundancy, essential to the safety of AVs, comes from using multiple sensor modalities (i.e., vision, radar and lidar) and fusing their data.

At an Intel/Mobileye press briefing at CES last week, Amnon Shashua, president and CEO of Mobileye, defied conventional wisdom. He said that by leveraging AI advancements, Mobileye now runs different neural network algorithms on multiple independent computer vision engines. This, he said, creates “internal redundancies.”

Mobileye is working on “Full-Stack Camera-only Autonomous Vehicle” (Source: Intel/Mobileye)

For example, Mobileye says it applies as many as six different algorithms to the camera-only subsystem for object detection. Separately, it runs four different algorithms to add depth to 2D images, which the company claims can effectively create 3D images without using lidars. The new neural networks used by Mobileye include “Parallax Net,” capable of providing accurate structure understanding; “Visual Lidar” (Vidar), offering DNN-based multi-view stereo; and “Range Net,” for metric physical range estimation.

Mobileye, however, isn’t suggesting that the automotive industry do away with radars or lidars. OEMs can, if they wish, add a combination of lidars and radars as a separate stream, said Shashua.

“It will be up to their customers,” said Phil Magney, founder and principal of VSI Labs. But what’s new here, Magney said, is “the evolution of AI that can now add a certain level of redundancies to camera-only sub-systems.”

2. Filter ‘big data’

Big data is what drives connected devices. No question. But even more important is how to extract quality information out of big data. That’s where everyone struggles.

Take highly automated vehicles, for example. A processing unit powerful enough to digest incoming sensory data – whose volume gets bigger every day – won’t be cheap. A big CPU/GPU tends to dissipate too much heat. And sending big data to the cloud for AI training gets expensive. Add to this the mounting cost of annotating big data.

One way to buck the trend is to filter the data at the edge.

Teraki is a good example. The Berlin, Germany-based company claims it has developed software technology that can “adaptively resize and filter data, for more accurate object detection and machine learning.” Lidars generate “point clouds,” collections of points that represent a 3D shape or feature, and these come with an enormous data load. What’s needed, said Teraki CEO Daniel Richart, is software that extracts information “fast enough, at the quality they need” before the data goes to a central sensor-data fusion unit inside an embedded system for transmission to the cloud.

Where Teraki sits in the ecosystem (Source: Teraki)

Paris, France-based Prophesee is another case. Its event-driven image sensors can directly address the issue of latency. Think of automatic emergency braking (AEB). Without waiting for an ADAS-equipped vehicle to finish fusing sensor data and issue a safety warning, the event-based camera can spot a road anomaly with minimal latency. In comparison, systems that fuse frame-based images with radar data often miss objects on the road, because automakers tune them to avoid false positives that would confuse drivers, explained Luca Verre, Prophesee CEO.
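For readers unfamiliar with event-based sensing, the sketch below uses the generic, textbook model of a dynamic-vision pixel: each pixel independently reports a timestamped “event” whenever its log intensity has changed by more than a contrast threshold, rather than waiting for the next full frame. This is an illustrative assumption, not Prophesee’s circuit or SDK; the function name `pixel_events` and the 0.2 threshold are hypothetical.

```python
import math
from typing import List, Tuple


def pixel_events(samples: List[Tuple[float, float]],
                 threshold: float = 0.2) -> List[Tuple[float, int]]:
    """Simplified model of a single event-camera pixel (hypothetical helper).

    samples:   (time_s, intensity) readings in time order, intensity > 0.
    threshold: contrast step in log-intensity units (assumed value).
    Returns (time_s, polarity) events: +1 = brighter, -1 = darker.
    """
    events: List[Tuple[float, int]] = []
    if not samples:
        return events
    # The pixel remembers the log intensity at which it last fired.
    reference = math.log(samples[0][1])
    for t, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # A large jump crosses several thresholds; emit one event per crossing.
        # Each event is stamped with the time the change was seen, not with the
        # time of the next frame boundary.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events
```

A pixel watching a static scene produces no data at all, while a sudden change is reported immediately; a frame camera at 30 frames per second, by contrast, can only deliver the same change with the next frame, up to about 33 ms later.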

3. AI chips on 40nm to be fabricated in Japan

CES is not exactly a venue where AI chip startups set up booths for demos. Nonetheless, AI chip company executives were on the prowl. AI hardware vendors such as Blaize and Gyrfalcon appeared on technical posters at their partners’ booths.

EE Times, however, caught up with Mythic co-founder and CEO Mike Henry in a press room. Mythic’s accelerators, based on a compute-in-memory approach, will be fabricated in Japan by Mie Fujitsu Semiconductor.

With technologies that range from ultra-low-power and non-volatile memory to RF, Mie Fujitsu, now wholly owned by UMC, offers foundry services based on 300mm wafer production facilities. According to Henry, Mythic will soon start sampling its first AI accelerator chip, which integrates a PCI Express interface to connect to the host, along with its SDK.

In the AI race, at one end of the spectrum, companies such as Nvidia have pioneered large AI models running on hardware that offers faster acceleration, lower latency and higher resolution. At the other end, chips run tiny machine-learning models, which can take a huge hit in accuracy, Henry explained. “The industry is currently stuck.”

Mythic hopes that by splitting the difference it will occupy a sweet spot. It is developing in-memory AI acceleration chips powerful enough to handle HD video at 30 frames per second, while achieving low latency and low cost. Because the computation happens inside the memory array, system vendors need not pay for external memory bandwidth.

Mythic CEO Mike Henry

For what sort of applications will such an AI acceleration chip be used?

“This is for high quality products,” explained Henry. AI acceleration can enhance image quality, even in low light. It can even detect and read license plates. In a parking garage, it could augment security. In short, AI can take over a lot of challenging high-quality video-capturing and processing tasks that traditional cameras can’t pull off without inventing new hardware.

The key to Mythic’s compute-in-memory approach is a Flash memory array combined with ADCs and DACs, turned into a matrix multiply engine. The Mythic chip Henry is holding in the picture above crams in 10,000 ADCs.
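To make the idea concrete, here is a toy numerical model of analog compute-in-memory: DACs turn digital inputs into analog drive levels, the stored cell values multiply them, the products sum along a shared output line, and one ADC per output digitizes the accumulated result. It is a simplified sketch under our own assumptions (weights and inputs scaled to [-1, 1], 8-bit converters by default, hypothetical names `quantize` and `cim_matvec`), not Mythic’s actual design.

```python
from typing import List


def quantize(value: float, bits: int, full_scale: float) -> float:
    """Clip value to [-full_scale, full_scale] and snap it to the nearest
    level of a signed, uniform converter with the given bit width."""
    levels = 2 ** (bits - 1) - 1
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / full_scale * levels) * full_scale / levels


def cim_matvec(weights: List[List[float]], x: List[float],
               dac_bits: int = 8, weight_bits: int = 8,
               adc_bits: int = 8) -> List[float]:
    """Toy model of an analog matrix-vector multiply inside a memory array.

    weights: one row per output line; values assumed to lie in [-1, 1].
    x:       input activations; values assumed to lie in [-1, 1].
    """
    # DACs convert each digital input into an analog drive level.
    x_analog = [quantize(v, dac_bits, 1.0) for v in x]
    outputs = []
    for row in weights:
        # Each stored weight is a programmed cell level with finite precision.
        cells = [quantize(w, weight_bits, 1.0) for w in row]
        # Multiply-accumulate: the products sum on one shared output line.
        accumulated = sum(w * v for w, v in zip(cells, x_analog))
        # One ADC per output line digitizes the sum; its full scale must cover
        # the largest possible sum, i.e. len(x) when |w| <= 1 and |x| <= 1.
        outputs.append(quantize(accumulated, adc_bits, float(len(x))))
    return outputs
```

With 8-bit converters the outputs land close to the exact dot products; with a 2-bit ADC, small results collapse to zero. That is one way to see why an analog design needs many reasonably precise ADCs.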

4. Selling into the future

Clearly, the initial euphoria over consumer IoT devices is over.

Most consumers think nothing of replacing smartphones or wearable devices in less than a year. In contrast, connected devices for the industrial market are a long game.

That applies equally to highly connected vehicles and Industrial IoT. As Gideon Wertheizer, CEO of CEVA, explained, many building blocks used in today’s highly automated vehicles (or industrial IoT devices) are not so different from what the industry has already developed. “The difference is whether you have the patience to stay in that [long-haul] business.”

Silicon Labs CEO Tyson Tuttle

Patience is one. Commitment is another.

Silicon Labs CEO Tyson Tuttle told us that, as IoT vendors chase design wins in the industrial market, a chip supplier’s job is never done at the time of sale. He said, “We are selling our chips into the future.” More specifically, the success of the industrial IoT business depends on chip suppliers’ commitment to support new software, protocol updates and applications. “All that over the next 10 to 15 years.”

5. Let big data companies see the forest but not the trees

In the era of big data, if you are interested in the protection of your private data or increasingly concerned about future AI applications, look to Europe for help.

Unlike U.S. regulatory agencies, which are hesitant to challenge big tech corporations’ “innovations,” European regulators are squarely committed to protecting their citizens. Privacy and AI are their top agenda items.

The EU’s General Data Protection Regulation (GDPR) is already setting the tone not just for Europe but for the worldwide market. Global companies engaged in data aggregation and analysis are careful to ensure that their business practices do not violate the GDPR.

At CES last week, we came across a Taiwan-based startup called DeCloak. The company’s founders succinctly described their technology as “letting big data companies see the forest without seeing any trees.”

In the burgeoning confluence of surveillance and social media, the idea of “de-cloaking” might never have occurred to Silicon Valley startups.

DeCloak has designed a privacy processing unit (PPU) on a 1x1mm chip. The PPU includes a true random number generator. Installed in a dongle connected to a smartphone, for example, the PPU would block any private data that would otherwise migrate automatically to the cloud.

DeCloak believes that de-identification is vital for medical data. Although data collectors often say they are anonymizing data, it’s unclear how much they are actually doing. Using the PPU, patients can de-identify their own data and comfortably participate in the sort of medical research that depends on big data, according to DeCloak.

6. AVs with chutzpah

At its press briefing, Mobileye showed a video clip shot while its autonomous vehicle was driving in Jerusalem.

Forget Hitchcock. This was one of the most gripping videos we’ve seen in a long time.

Mobileye CEO Amnon Shashua showed a two-minute clip of the autonomous vehicle coping with traffic impediments (including vehicles randomly parked on the street) and navigating oncoming traffic. The AV, trying to maintain a safe distance, gets boxed in but finds its way out. As it apparently prepares to make a left turn at a busy intersection, the AV suddenly faces a tandem bus turning and cutting across its path from the right.

The huge bus totally occludes the AV’s view while another vehicle looms on the left. To make matters worse, pedestrians are randomly crossing the road as the AV tries to escape the jam with an unprotected left turn into heavy traffic.

Throughout the video, Mobileye’s AV demonstrates a certain level of assertiveness — perhaps even chutzpah. When it finally reaches the intersection, the AV is confronted by yet another vehicle on its left. But the AV negotiates the unwieldy traffic, asserts itself and finishes the left turn. The AV got a thunderous hand from the press briefing crowd as they shared a collective sigh of relief.

How often do you get that kind of reaction from the jaded members of the media?

Just to prove that Mobileye did not conveniently show only the good part, the company released a separate video clip — 22 minutes long. This version shows the AV going through the same intersection but with less drama.

7. Why are we doing AV?

CES 2020 effectively ended a hype cycle and deflated unrealistic expectations for the imminent arrival of fully autonomous vehicles. Robo-taxis? Maybe in a few years.

As for consumer AVs, probably not until 2030, according to Peter Schiefer, Infineon’s division president responsible for automotive.

Automotive chip companies, traditionally known for conservative views on highly automated vehicles, have gotten their voice back. In contrast, Nvidia chose not to hold a press conference this year. Without Nvidia CEO Jensen Huang to quote at CES, the media had less copy to write about the remarkable progress of AI, big SoCs integrated with GPU cores with whopping teraflops and more design wins in AV prototypes.

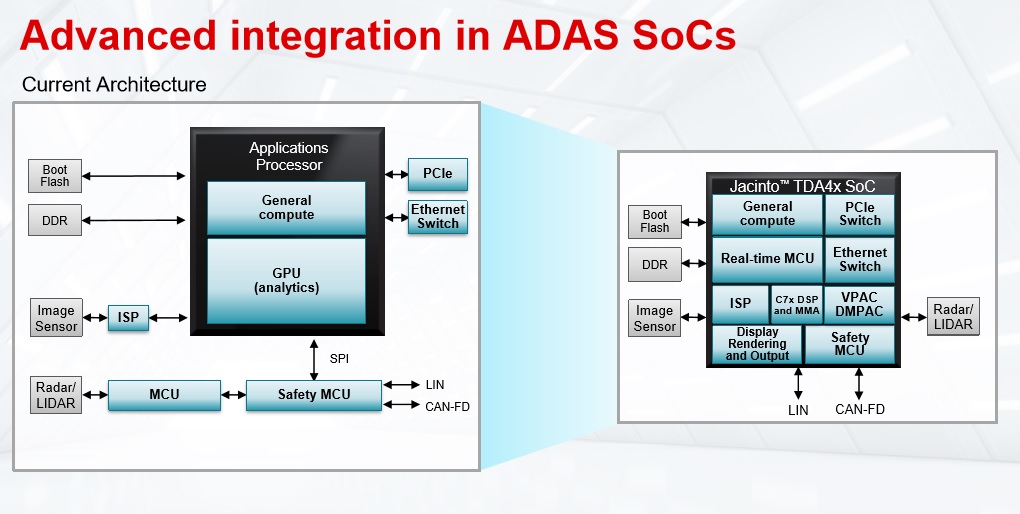

Instead, Sameer Wasson, vice president and general manager of TI’s processor business unit, took a more reflective approach. Talking about AVs, he said, “We need to ask ourselves why we are doing this.”

Uber might be doing AV to replace drivers. Semiconductor companies with big processors are certainly looking for an opportunity to tackle a new computing architecture for the sake of pushing the AI envelope.

But for TI, the answer is simple: to develop practical cars enabled by more advanced safety functions.

Wasson said, “Our mission is 1) to make advanced automated features as accessible as possible to everyone, 2) to learn from the system (what automakers need) and 3) to effectively manage a whole product cycle across product lines with a scalable solution — more than 15 years.”

At CES, TI rolled out ADAS and gateway processors built on its latest Jacinto platform and designed to enable mass-market ADAS vehicles.

TI’s new ADAS processor TDA4VM is not only highly integrated but also capable of fusing a variety of sensory data. (Source: TI)

8. Can cars tell us their intent?

Developers running prototype robotaxis are eager to convince consumers that AVs are safe. Intel/Mobileye said its robocar provides a display showing what the AV is seeing. Riders can play back a few seconds of video to see why the AV did what it did.

Qualcomm President Cristiano Amon unveils Qualcomm Snapdragon Ride platform. (Image: EE Times)

Qualcomm, meanwhile, brought its AV to Las Vegas, giving the media and its clients a chance to ride.

Jim McGregor, principal analyst at Tirias Research, who took the ride, told us, “The ride was on a highway in Vegas. The car did well at merging, navigation and even avoiding an aggressive Camaro that cut us off and almost spun out. Overall, it was a comfortable ride, and the system appeared to operate very well.”

One new Qualcomm feature enables the AV to vocally announce to passengers its intent — that it is trying to merge to the right, for example. We know it will be a long time before AI can explain itself (why it arrived at a certain decision) to humans. But it’s possible now, and a good idea, for an AV to let the passengers know what it plans to do next.

9. Qualcomm-NXP: Life after the M&A was called off

It’s been 18 months since Qualcomm’s proposed acquisition of NXP Semiconductors fell through. At CES last week, several chip company execs remarked on how little the unconsummated deal has affected either company.

Qualcomm soldiered on to expand its automotive business — without NXP.

NXP sans Qualcomm, meanwhile, has been beefing up its connectivity portfolio. Last year, NXP acquired Marvell’s Wi-Fi and Bluetooth connectivity assets. NXP is also pushing ultra-wideband (UWB) as a much more precise location technology.

Still, NXP is missing cellular technologies. The company doesn’t have big AI solutions, either.

Is Qualcomm’s aggressive foray into the automated vehicle platform worrisome to NXP? Not really, according to Lars Reger, NXP CTO. “We will gladly let Nvidia, Qualcomm, Kalray and others fight for the big AI space.”

NXP CTO Lars Reger in the company’s tent at CES 2020 in Las Vegas. (Photo: Mitch Tobias / mitchtobias.com)

After all, the market for fully autonomous vehicles will remain relatively small. Further, competition among these powerful AI chips is expected to get even more brutal, because everyone wants to use finer 7nm or 5nm process nodes, which aren’t cheap.

“NXP’s focus will be on the rest of the automotive platform,” said Reger, with solutions ranging from safety, security and gateways to domain controllers and edge nodes/sensors.

10. It takes a village…

Anyone serious about the future of connectivity and shared mobility, with fully autonomous vehicles roaming the streets, must begin planning a brand-new “smart” village.

Toyota did a complete 180 before a tech audience expecting Toyota’s new AI-driven vehicles. Almost completely absent from Toyota CEO Akio Toyoda’s presentation was any mention of automobiles.

Instead, Toyoda showed artist’s renderings of a futuristic “Woven City” of 2,000 residents, nestled in the shadow of Mount Fuji.

Toyota literally leapfrogged the dream of fully autonomous vehicles and transformed it into AI-driven smart-city concepts. Toyota’s vision includes high-rise “blocks” surrounded by greenery, each roofed with photovoltaic tiles to convert sunlight to energy, plus an underground hydrogen power plant to provide additional energy. Robots will be everywhere: “sensor-based AI detects the household’s needs before any human notices, stocking the fridge, adjusting the heat, collecting the trash, monitoring the baby, housebreaking the puppy, and polishing the doorknobs,” reported EE Times.

Autonomous vehicles turned into food trucks, delivery vehicles and shared shuttles are only part of the story of the smart village.

Asked if this sounds too crazy, Alexander Hitzinger, CEO of VW Autonomy GmbH, said, “Not at all. That’s where the future of mobility is.” In other words, “mobility” isn’t just about going from point A to point B. It’s about the entire environment in which AVs will operate.