Top 10: AI Chip Startups at the Edge

Article By : Sally Ward-Foxton

Our top ten picks for most promising and/or interesting chip startups for AI in edge and endpoint applications.

As the industry grapples with the best way to accelerate AI performance to keep up with the requirements of cutting-edge neural networks, many startup companies are springing up around the world with new ideas about how this is best achieved. The sector is attracting a lot of venture capital funding, and the result is an industry rich not just in cash, but in novel ideas for computing architectures.

Here at EETimes we are currently tracking around 60 AI chip startups in the US, Europe and Asia, from companies reinventing programmable logic and multi-core designs, to those developing their own entirely new architectures, to those using futuristic technologies such as neuromorphic (brain-inspired) architectures and optical computing.

Here is a snapshot of ten we think show promise, or at the very least, have some interesting ideas. We’ve got them categorized by where in the network their products are targeted: data centers, endpoints, or AIoT devices.

AI in the Data Center

Yes, a data center can count as ‘edge’, depending on where it is. The key concept of edge computing is that data is processed in (or near) the same geographical location where it is generated or gathered. This includes gateway or hub devices, but also on-premise servers that accelerate companies’ individual AI applications. Think servers that accelerate image classification for X-rays or CT scans in a hospital or medical research facility, or gateways that receive status data from the factory floor and process it on-site.

Graphcore — Graphcore, based in Bristol, UK, hit the news when an early funding round valued the company at more than $1 billion, making it the first Western semiconductor startup to be designated a unicorn.

The company’s IPU (intelligence processing unit) chip has a massively parallel architecture with more than 1,200 specialized cores, each of which can run six program threads. There is also substantial on-chip memory (hundreds of MB of RAM) plus, importantly, 45 terabytes per second of memory bandwidth. With that much memory on the chip, entire machine learning models can be stored on-chip.
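To give a feel for the scale involved, the sketch below multiplies out the minimum thread count and checks whether a model’s weights alone would fit in on-chip memory. The 300 MB capacity and the 2-bytes-per-parameter (FP16) storage are assumptions for illustration, since the article only says “hundreds of MB”; a real deployment also needs room for activations and code.

```python
# Rough scale arithmetic for an IPU-like chip (illustrative figures only).
CORES = 1_200            # lower bound: "more than 1,200" cores, per the article
THREADS_PER_CORE = 6     # each core runs six program threads
ON_CHIP_MB = 300         # ASSUMPTION: the article only says "hundreds of MB"


def min_hardware_threads(cores: int = CORES, per_core: int = THREADS_PER_CORE) -> int:
    """Lower bound on concurrently resident program threads."""
    return cores * per_core


def weights_fit_on_chip(num_params: int, bytes_per_param: int = 2,
                        capacity_mb: int = ON_CHIP_MB) -> bool:
    """True if the weights alone fit in on-chip RAM.

    Ignores activations, code and buffers, so a real check would be stricter.
    bytes_per_param=2 assumes FP16 storage.
    """
    weight_bytes = num_params * bytes_per_param
    return weight_bytes <= capacity_mb * 1_000_000


print(min_hardware_threads())            # 7200
print(weights_fit_on_chip(25_000_000))   # True: ~50 MB of FP16 weights
print(weights_fit_on_chip(340_000_000))  # False: ~680 MB of FP16 weights
```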

Graphcore’s IPU chip is available in a Dell server for edge compute applications.

Groq — Groq, founded in Silicon Valley by a team from Google, employs 70 people and has raised $67 million in funding to date. At SC ’19 the company officially unveiled its enormous chip, which is capable of 1,000 TOPS (1 peta-OPS).

The company’s software-first approach means its compiler handles many control functions that would normally happen in hardware, such as execution planning. Software orchestrates all the dataflow and timing required to make sure calculations happen without stalls, making latency, performance and power consumption entirely predictable at compile time.
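To make “predictable at compile time” concrete, here is a minimal sketch of static scheduling: if every operation has a fixed, known latency, a compiler can assign each one a start cycle ahead of time, and the end-to-end latency falls out of that schedule without running anything. The operation names, latencies and as-soon-as-possible policy are hypothetical and do not describe Groq’s compiler, which must also place work on specific functional units and route data between them.

```python
from typing import Dict, List


def static_schedule(ops: Dict[str, int], deps: Dict[str, List[str]]) -> Dict[str, int]:
    """Assign each op a fixed start cycle (as soon as possible) before anything runs.

    ops  maps op name -> fixed latency in cycles (assumed known at compile time)
    deps maps op name -> names of ops whose results it consumes
    Assumes unlimited functional units and a dependency graph with no cycles.
    """
    start: Dict[str, int] = {}

    def earliest(op: str) -> int:
        if op not in start:
            # An op can start once every producer has finished.
            start[op] = max((earliest(d) + ops[d] for d in deps.get(op, [])), default=0)
        return start[op]

    for op in ops:
        earliest(op)
    return start


def total_latency(ops: Dict[str, int], schedule: Dict[str, int]) -> int:
    """End-to-end latency, fully determined by the schedule."""
    return max(schedule[op] + ops[op] for op in ops)


# A toy layer: load weights and activations, multiply, add bias, activate.
ops = {"load_w": 4, "load_x": 4, "matmul": 10, "bias": 1, "relu": 1}
deps = {"matmul": ["load_w", "load_x"], "bias": ["matmul"], "relu": ["bias"]}
sched = static_schedule(ops, deps)
print(sched)                      # start cycle of every op
print(total_latency(ops, sched))  # 16
```

Because nothing in the schedule depends on runtime events such as cache misses, the same 16-cycle figure holds every time the layer runs.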

Groq is targeting data center applications and autonomous vehicles with its tensor streaming processor (TSP) chip. The part is sampling now on a PCIe board.

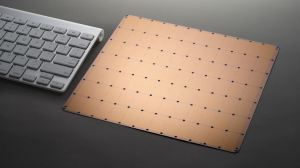

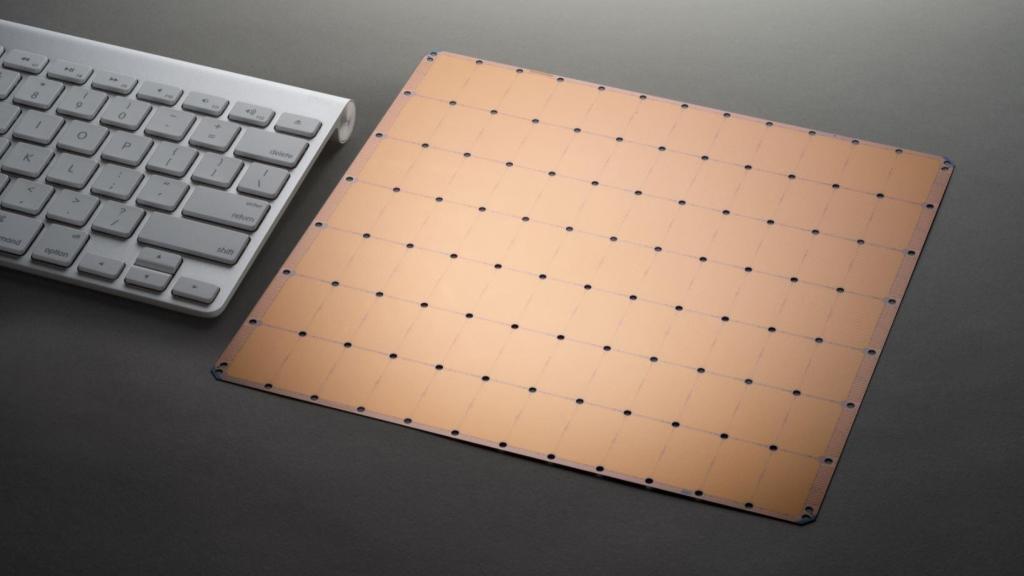

Cerebras — Cerebras is famous for resurrecting the wafer-scale chip idea, which had previously been abandoned in the 1980s.

Cerebras’ enormous 46,225 mm² die, which takes up an entire wafer, consumes 15 kW and packs 400,000 cores and 18 GB of memory onto 84 processor tiles. While these figures may seem extreme, remember that one Cerebras chip is designed to replace thousands of GPUs.
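Dividing the headline figures through gives a feel for the per-core resources. This is back-of-the-envelope arithmetic on the numbers quoted above; treating 18 GB as decimal gigabytes and spreading cores and memory evenly across the die are simplifying assumptions.

```python
import math

DIE_AREA_MM2 = 46_225
POWER_W = 15_000
CORES = 400_000
MEMORY_BYTES = 18 * 10**9   # 18 GB, treated as decimal gigabytes
TILES = 84

side_mm = math.sqrt(DIE_AREA_MM2)                 # side of a square with this area
cores_per_tile = CORES / TILES                    # ~4,762 cores per tile
memory_per_core_kb = MEMORY_BYTES / CORES / 1000  # 45 KB per core
power_density = POWER_W / DIE_AREA_MM2            # ~0.32 W per mm²

print(f"{side_mm:.0f} mm per side, {cores_per_tile:.0f} cores/tile, "
      f"{memory_per_core_kb:.0f} KB/core, {power_density:.2f} W/mm²")
```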

The company says it has solved problems that previously plagued wafer-scale designs, such as yield (it routes around defects), and has invented packaging that counters thermal effects.
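One way to route around defects is to build in spare cores and map logical cores only onto physical cores that pass test. The sketch below shows that idea in its simplest per-row form; the spare-per-row layout and the function name are hypothetical, and the article does not describe how Cerebras actually implements its redundancy.

```python
from typing import List, Set, Tuple


def map_logical_cores(rows: int, physical_cols: int, logical_cols: int,
                      defects: Set[Tuple[int, int]]) -> List[List[int]]:
    """Map each row's logical cores onto working physical cores.

    Hypothetical redundancy scheme: each row has (physical_cols - logical_cols)
    spare cores, and logical core k uses the k-th non-defective core in its row.
    Raises ValueError if a row has more defects than spares.
    """
    mapping = []
    for r in range(rows):
        good = [c for c in range(physical_cols) if (r, c) not in defects]
        if len(good) < logical_cols:
            raise ValueError(f"row {r}: {physical_cols - len(good)} defects, "
                             f"only {physical_cols - logical_cols} spares")
        mapping.append(good[:logical_cols])
    return mapping


# 3 rows of 5 physical cores, 4 logical cores per row, one spare per row.
print(map_logical_cores(3, 5, 4, defects={(0, 1), (2, 4)}))
# [[0, 2, 3, 4], [0, 1, 2, 3], [0, 1, 2, 3]]
```

Software sees a clean 3 × 4 grid in every case; only the mapping table changes from wafer to wafer.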

The company has raised more than $200 million and has previously said its rack system is running in a handful of customer data centers.

Cambricon — One of China’s first AI chip companies, but by no means its last, Cambricon was founded in 2016 by two researchers from the Chinese Academy of Sciences, who also happen to be brothers.

Citing the lack of agility in CPU and general purpose GPU (GPGPU) instruction sets for the acceleration of neural networks, they developed their own instruction set architecture (ISA), a load-store architecture that integrates scalar, vector, matrix, logical, data transfer and control instructions.
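In a load-store architecture, only load and store instructions touch memory; every compute instruction works on registers. The toy interpreter below illustrates that split with a handful of matrix, vector and data-transfer instructions, enough to run one fully connected layer. The opcodes and register names are hypothetical and cover only a subset of the instruction classes the article lists; this is not Cambricon’s actual ISA.

```python
from typing import Dict, List, Tuple


def run(program: List[Tuple], memory: Dict[str, object]) -> Dict[str, object]:
    """Interpret a toy load-store program and return the updated memory.

    Only LOAD and STORE access memory; compute instructions read and write
    registers. Hypothetical opcodes, not a real vendor ISA.
    """
    regs: Dict[str, object] = {}
    for op, *args in program:
        if op == "LOAD":            # LOAD dst, addr  (data transfer)
            dst, addr = args
            regs[dst] = memory[addr]
        elif op == "STORE":         # STORE src, addr (data transfer)
            src, addr = args
            memory[addr] = regs[src]
        elif op == "MMUL":          # matrix-vector product: dst = A @ x
            dst, a, x = args
            regs[dst] = [sum(w * v for w, v in zip(row, regs[x])) for row in regs[a]]
        elif op == "VADD":          # element-wise vector add
            dst, a, b = args
            regs[dst] = [p + q for p, q in zip(regs[a], regs[b])]
        elif op == "VRELU":         # element-wise max(0, v)
            dst, a = args
            regs[dst] = [max(0.0, v) for v in regs[a]]
        else:
            raise ValueError(f"unknown opcode {op}")
    return memory


# One fully connected layer: y = relu(W @ x + b)
mem = {"W": [[1.0, -1.0], [2.0, 0.5]], "x": [3.0, 4.0], "b": [0.0, -1.0]}
prog = [("LOAD", "m0", "W"), ("LOAD", "v0", "x"), ("LOAD", "v1", "b"),
        ("MMUL", "v2", "m0", "v0"), ("VADD", "v2", "v2", "v1"),
        ("VRELU", "v2", "v2"), ("STORE", "v2", "y")]
print(run(prog, mem)["y"])  # [0.0, 7.0]
```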

Cambricon’s first product, Cambricon-1A, is used in tens of millions of smartphones and other endpoint devices such as drones and wearables. Today, second-generation chips include two parts for the cloud plus an edge compute chip, the Siyuan 220. This edge chip was designed to fill a gap in the company’s portfolio for edge compute. It offers 8 TOPS performance and consumes 10W.

Cambricon (along with Horizon Robotics, see below) is currently one of the world’s most valuable chip startups: the company has raised $200m so far and has a market valuation of around $2.5bn.

AI in the Endpoint

“Endpoint” refers to devices at the end of the network, where the data is processed inside the same device that collected the data. This might include everything from security cameras to consumer electronics and appliances. Of course, there is some grey area as some devices can be used as either gateways or endpoints (consider autonomous vehicles or smartphones).

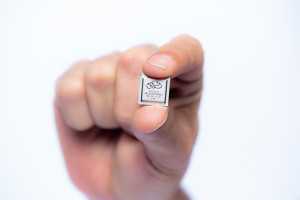

Hailo — Hailo was founded in 2017 in Tel-Aviv, Israel, by former members of the Israel Defense Forces’ elite intelligence unit. The company has around 60 employees and has raised $21 million to date.

The company’s AI co-processor, the Hailo-8, can handle 26 TOPS with a power efficiency of 2.8 TOPS/W. It is targeted at ADAS (advanced driver assistance systems) and autonomous driving applications. Its architecture intersperses memory, control and compute blocks, and software allocates groups of adjacent blocks to compute each layer of a neural network. Minimising the data sent on and off the chip helps save power.
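The article does not detail Hailo’s mapping algorithm, but the general idea of software assigning adjacent blocks to each layer can be sketched as a proportional allocation over a one-dimensional row of blocks. The layer costs, block count and largest-remainder policy below are all assumptions for illustration; a real compiler would also balance memory and the dataflow between layers.

```python
from typing import List, Tuple


def allocate_blocks(layer_costs: List[float], num_blocks: int) -> List[Tuple[int, int]]:
    """Give each layer a contiguous range [start, end) of compute blocks.

    Hypothetical policy: every layer gets at least one block, the remaining
    blocks are shared out in proportion to each layer's compute cost, and
    layers are laid out in order so consecutive layers sit in adjacent blocks.
    """
    if num_blocks < len(layer_costs):
        raise ValueError("need at least one block per layer")
    total = sum(layer_costs)
    spare = num_blocks - len(layer_costs)
    shares = [spare * c / total for c in layer_costs]
    counts = [1 + int(s) for s in shares]
    # Hand blocks lost to rounding down to the layers with the largest remainders.
    leftover = num_blocks - sum(counts)
    by_remainder = sorted(range(len(shares)),
                          key=lambda i: shares[i] - int(shares[i]), reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    ranges, start = [], 0
    for n in counts:
        ranges.append((start, start + n))
        start += n
    return ranges


print(allocate_blocks([4.0, 2.0, 1.0, 1.0], num_blocks=12))
# [(0, 5), (5, 8), (8, 10), (10, 12)]
```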

Mass production of the Hailo-8 is due to begin in the first half of 2020.

Kneron — With 150 employees in San Diego and Taiwan and $73 million in funding, Kneron was one of the first startups to get silicon on the market back in May 2019. The company has several customers already announced for its first generation KL520 chip and made “millions of dollars” in revenue in 2019.

The KL520 is optimised for convolutional neural networks (CNNs) and can run 0.3 TOPS at 0.5W (equivalent to 0.6 TOPS/W). This is enough for facial recognition in IP security cameras, for example, but it can also be found in smart door locks and doorbells.
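The TOPS/W figure is simply peak throughput divided by power. Applying the same division to the other figures quoted in this article gives a rough efficiency comparison. These are peak vendor numbers measured under different conditions, so the ranking is indicative only; Hailo-8’s power is not quoted directly and is back-calculated from its stated 2.8 TOPS/W.

```python
# Peak throughput (TOPS) and power (W) as quoted in this article.
# Hailo-8's power is derived from its quoted 26 TOPS and 2.8 TOPS/W.
chips = {
    "Cambricon Siyuan 220": (8.0, 10.0),
    "Hailo-8": (26.0, 26.0 / 2.8),
    "Kneron KL520": (0.3, 0.5),
    "Horizon Journey 2": (4.0, 2.0),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak energy efficiency; real workloads rarely run at peak utilization."""
    return tops / watts


# Print the chips from most to least efficient.
for name, (tops, watts) in sorted(chips.items(), key=lambda kv: -tops_per_watt(*kv[1])):
    print(f"{name:22s} {tops:5.1f} TOPS {watts:5.1f} W {tops_per_watt(tops, watts):4.1f} TOPS/W")
```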

The company started out making neural networks for facial recognition and it now offers these alongside IP for its neural processing unit (NPU). A second-generation chip is due this summer, and it will be able to accelerate both CNNs and recurrent neural networks (RNNs), the company said.

Mythic — Mythic was founded in 2012 at the University of Michigan. The company, now based in Austin, Texas, has raised $86 million to develop its analog compute chip which uses a processor-in-memory technique based on Flash transistors for power, performance and cost advantages versus CPUs and GPUs.

Processor-in-memory is not new, but Mythic says it has figured out the tricky compensation and calibration techniques that cancel out noise and allow reliable 8-bit computation. The company plans to sell standalone chips as well as multi-chip processing cards. Since the device can handle image processing on HD video at 30fps, one of Mythic’s key target markets is security cameras and on-premises aggregators for security camera systems.
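Mythic has not published its compensation scheme here, so the following is only a generic sketch of analog in-memory multiply-accumulate: weights act as cell conductances, inputs as voltages, and the summed column current is the dot product. It models a systematic gain and offset error in a column’s readout path (hypothetical values), removes it with a two-point calibration, then quantizes to an 8-bit code. Random noise, temperature drift and flash cell variation, which a real design must also handle, are not modelled.

```python
from typing import List, Tuple


def analog_column(weights: List[float], inputs: List[float],
                  gain: float, offset: float) -> float:
    """Summed cell currents (the ideal dot product) distorted by readout gain and offset."""
    return gain * sum(w * x for w, x in zip(weights, inputs)) + offset


def calibrate(gain: float, offset: float, n: int) -> Tuple[float, float]:
    """Estimate gain and offset from two known test vectors.

    Assumes the error lives in the column readout, independent of stored weights,
    so a column of known unit weights can be used as the reference.
    """
    ones = [1.0] * n
    zero_out = analog_column(ones, [0.0] * n, gain, offset)  # ideal result: 0
    full_out = analog_column(ones, [1.0] * n, gain, offset)  # ideal result: n
    return (full_out - zero_out) / n, zero_out


def adc_8bit(value: float, full_scale: float) -> int:
    """Quantize a signed value to the nearest 8-bit code in [-128, 127]."""
    code = round(value / full_scale * 127)
    return max(-128, min(127, code))


weights = [0.5, -0.25, 1.0, 0.75]
inputs = [1.0, 0.5, 0.25, -1.0]
gain, offset = 1.08, 0.04                  # hypothetical readout error
raw = analog_column(weights, inputs, gain, offset)
g, o = calibrate(gain, offset, len(weights))
corrected = (raw - o) / g
print(adc_8bit(raw, 4.0), adc_8bit(corrected, 4.0))  # -3 -4 (the ideal code is -4)
```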

Mythic CEO Mike Henry told EETimes at CES that its chip will be sampling “shortly”.

Horizon Robotics — Founded in 2015 in Beijing, China, this startup had raised around $600 million by the end of 2019 and was valued at $3 billion. Today, Horizon Robotics has more than 500 employees and holds more than 600 patents.

Horizon Robotics’ BPU (brain processing unit) was originally designed for computer vision applications. The second generation of the BPU is a 64-bit multiple-instruction, multiple-data (MIMD) core that can handle all types of neural networks (not just convolutional networks). It uses the company’s sparse neural networks to predict the movement of objects and to parse scenes. A third generation will add acceleration for decision-making algorithms and for other parts of AI outside of deep learning.

Horizon Robotics has two chip product lines: Journey for automotive, and Sunrise for AIoT applications. The first generation of Journey and Sunrise chips launched in December 2017, with a second generation based on BPU 2.0 arriving in autumn 2019. Journey 2 offers 4 TOPS at 2 W for L3/L4 autonomous driving, and combined with Horizon’s own algorithms for perception, achieves 90% core utilization.
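Utilization matters because peak TOPS is only reached when every compute unit is busy. A quick calculation shows what 90% utilization means for the Journey 2 figures above, under the simplifying assumption that the chip draws its quoted 2 W while running at that utilization. Since 1 W divided by 1 TOPS is 10⁻¹² J, watts per TOPS reads directly as picojoules per operation.

```python
PEAK_TOPS = 4.0      # Journey 2 peak, per the article
POWER_W = 2.0        # ASSUMPTION: the quoted 2 W also applies at 90% utilization
UTILIZATION = 0.90   # the article's 90% core utilization figure

effective_tops = PEAK_TOPS * UTILIZATION         # useful throughput
effective_tops_per_w = effective_tops / POWER_W  # useful work per watt
pj_per_op = POWER_W / effective_tops             # W per TOPS == pJ per operation

print(f"{effective_tops:.1f} TOPS, {effective_tops_per_w:.1f} TOPS/W, {pj_per_op:.2f} pJ/op")
# 3.6 TOPS, 1.8 TOPS/W, 0.56 pJ/op
```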

AI in the IoT (TinyML)

In this category we consider chips with microcontroller levels of compute that operate in resource-constrained environments at ultra-low power. AI inference on the endpoint device is very attractive in these circumstances, since it reduces latency, saves bandwidth, helps privacy and saves the power associated with RF transmission of data to the cloud.

GreenWaves — GreenWaves, a spin-out from the University of Bologna that is based in Grenoble, France, uses multiple RISC-V cores in an ultra-low-power ML application processor for battery-powered sensing devices. The company relies on its custom instruction set extensions to facilitate DSP operations and AI acceleration at minimal power consumption.

GreenWaves’ second-generation product, GAP9, uses 10 cores. Of those, one acts as a fabric controller while nine make up the compute cluster, with the controller and cluster in separate voltage and frequency domains so that each consumes power only when necessary. It also takes advantage of a state-of-the-art FD-SOI (fully depleted silicon on insulator) process technology to further minimise power consumption.
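The benefit of separate domains is easiest to see with a duty-cycle model: a sensing device’s compute cluster is busy only a small fraction of the time, so what it draws while idle dominates the average. All power figures below are invented for illustration and are not GreenWaves measurements; the point is the shape of the calculation, comparing a cluster that stays powered (clock-gated) when idle with one whose own domain can be switched almost off.

```python
def average_power_uw(fc_uw: float, cluster_active_uw: float,
                     cluster_idle_uw: float, cluster_duty: float) -> float:
    """Average power with an always-on controller and a duty-cycled cluster.

    The cluster draws cluster_active_uw for the fraction cluster_duty of the
    time and cluster_idle_uw for the rest.
    """
    if not 0.0 <= cluster_duty <= 1.0:
        raise ValueError("cluster_duty must be between 0 and 1")
    return fc_uw + cluster_duty * cluster_active_uw + (1 - cluster_duty) * cluster_idle_uw


# Hypothetical figures in microwatts; the cluster is busy 5% of the time.
shared_domain = average_power_uw(500, 20_000, 2_000, 0.05)  # idle cluster still leaks
split_domain = average_power_uw(500, 20_000, 10, 0.05)      # cluster domain switched off
print(f"{shared_domain:.1f} uW vs {split_domain:.1f} uW")   # 3400.0 uW vs 1509.5 uW
```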

GreenWaves’ figures have GAP9 running MobileNet V1 on 160 x 160 images with a channel scaling of 0.25 in just 12ms with a power consumption of 806 μW/frame/second.
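The unit μW/frame/second is power divided by frame rate, which works out to energy per frame: 806 μW per frame/s is 806 μJ per inference. The sketch below uses that to estimate average power at a chosen frame rate; it assumes energy scales linearly with frame rate and ignores idle and sensor power.

```python
ENERGY_PER_FRAME_UJ = 806.0  # 806 uW per (frame/s) is the same as 806 uJ per frame
INFERENCE_TIME_MS = 12.0     # MobileNet V1, 160 x 160 input, 0.25 channel scaling


def max_fps() -> float:
    """Upper bound on frame rate if inferences run back to back."""
    return 1000.0 / INFERENCE_TIME_MS


def average_power_mw(fps: float) -> float:
    """Average inference power at a given frame rate (idle power not included)."""
    if fps > max_fps():
        raise ValueError("frame rate exceeds what a 12 ms inference allows")
    return ENERGY_PER_FRAME_UJ * fps / 1000.0


print(f"max {max_fps():.0f} fps")                  # max 83 fps
print(f"{average_power_mw(10):.2f} mW at 10 fps")  # 8.06 mW at 10 fps
```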

GAP9 samples are set to arrive in the first half of 2020.

Eta Compute — Eta Compute’s design for AI processing in ultra-low-power IoT devices uses two cores: an Arm Cortex-M3 microcontroller core plus a DSP. Both cores use the company’s clever dynamic voltage and frequency scaling (DVFS) techniques, implemented without PLLs, to run at the lowest possible power levels. The AI workload can run on either core, or both (software handles the allocation). Using this technique, always-on image processing and sensor fusion can be achieved within a power budget of 100µW.
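The savings from DVFS come from the voltage-squared term in CMOS dynamic power, P ≈ C·V²·f: if a task can meet its deadline at a lower clock, the supply voltage can drop too. The sketch below picks the lowest-power operating point that still meets a deadline. The voltage/frequency table and the switched capacitance are hypothetical, and the sketch says nothing about how Eta Compute generates its clocks without PLLs.

```python
from typing import List, Tuple

# Hypothetical operating points: (supply voltage in V, clock frequency in MHz).
OPERATING_POINTS: List[Tuple[float, float]] = [(0.55, 10.0), (0.7, 40.0), (0.9, 100.0)]
SWITCHED_CAP_F = 1e-10  # hypothetical effective switched capacitance (100 pF)


def dynamic_power_w(volts: float, mhz: float, cap_f: float = SWITCHED_CAP_F) -> float:
    """Classic CMOS dynamic power estimate: P = C * V^2 * f."""
    return cap_f * volts ** 2 * mhz * 1e6


def pick_operating_point(cycles: int, deadline_ms: float) -> Tuple[float, float]:
    """Lowest-power operating point that still finishes `cycles` before the deadline."""
    for volts, mhz in sorted(OPERATING_POINTS, key=lambda p: dynamic_power_w(*p)):
        runtime_ms = cycles / (mhz * 1e3)  # 1 MHz = 1,000 cycles per ms
        if runtime_ms <= deadline_ms:
            return volts, mhz
    raise ValueError("no operating point meets the deadline")


print(pick_operating_point(cycles=200_000, deadline_ms=10.0))  # (0.7, 40.0)
```

At 10 MHz the 200,000-cycle task would take 20 ms and miss its deadline, so the sketch steps up to the 40 MHz point rather than jumping straight to the highest-voltage setting.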

The company also optimises neural networks for ultra-low-power applications that will run on its ECM3532 device.

The company was founded in 2015, employs 35 people in the US and India and has raised $19 million in funding to date. Samples are available now.