Software to Be a Major Enabler for MEMS in Smart Devices

Article By: Anne-Françoise Pelé

Markus Ulm, CTO of Bosch Sensortec, details the increasing role of software in MEMS sensors.

Hardware, and particularly MEMS sensors, will remain an essential part in end devices, but moving forward, software will be equally important in bringing value to the user. Bosch Sensortec believes sensor software will become increasingly intelligent, turning MEMS sensors into more accurate and personalized systems that can help the user adapt to any situation.

“Software adds new capabilities to classical sensor components,” Markus Ulm, CTO of Bosch Sensortec (a wholly owned subsidiary of Robert Bosch GmbH), told EE Times. “I am deeply convinced it is making a big difference to our industry,” fostering MEMS sensor adoption in current and new applications.

MEMS standardization

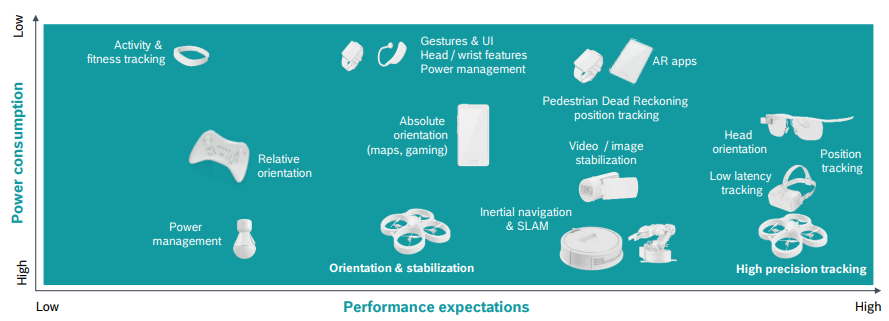

“One product, one process” is a well-known law in the MEMS industry. MEMS devices are highly customized to meet specific requirements in terms of power consumption, latency, stability and memory. For instance, VR headsets need the lowest latency, robotic vacuum cleaners require high stability at varying temperatures, and wearables require self-learning and orientation tracking at ultra-low power. “It is harsh to develop a one-size-fits-all product and have enough coverage to really make a commercially viable case,” said Ulm.

Moreover, progress toward process standardization is hardly apparent, marking a significant difference from conventional semiconductor processes. “There hasn’t been any improvement and advancement toward MEMS standardization,” Dimitrios Damianos, a tech market analyst with Yole Développement (Lyon, France), told EE Times. “It has been like this for the past twenty to thirty years, with people speaking of standardization, but it is not happening. That’s why business models are different for MEMS foundries and CMOS foundries.”

Software, Ulm claimed, is one of the major enablers for overcoming this paradigm and making a commercially viable case.

Smarter and smarter

Software has come a long way from reading out sensor data to evaluating data and eventually learning and making local decisions based on the data.

“The combination of software and hardware leads to new ways of creating sensors and to new ways of sensing,” said Ulm.

If the hardware components include more than one type of sensor, the software components bring the various raw measurements together and turn the results into more valuable information. “So this whole system is able to generate a higher level of information regarding sensing compared to sensor components.” With Bosch Sensortec’s BME680 gas sensor, for instance, “if you just use the sensor raw data, you wouldn’t be able to classify different gases. The techniques that are employed in a gas sensor to do the temperature spectroscopy are enabled by software.”

Sensor fusion is the software that intelligently combines and evaluates data from several sensors to improve application or system performance. In practice, however, it is not straightforward to make multiple sensors work together and collect useful data.
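To make the idea of combining sensor data concrete, here is a minimal sketch of one textbook fusion technique, a complementary filter that blends a gyroscope with an accelerometer to estimate tilt. It is not Bosch Sensortec's method; the function names, the blend factor `alpha` and the synthetic readings are all assumptions chosen for illustration.

```python
import math

def accel_tilt_deg(ax, az):
    """Tilt angle in degrees implied by gravity on the accelerometer x and z axes."""
    return math.degrees(math.atan2(ax, az))

def complementary_filter(samples, dt, alpha=0.98, angle0=0.0):
    """Fuse gyro rate (deg/s) with accelerometer tilt.

    samples: iterable of (gyro_rate_dps, ax, az) tuples.
    alpha:   weight on the integrated gyro angle (illustrative value).
    """
    angle = angle0
    history = []
    for gyro_rate, ax, az in samples:
        gyro_angle = angle + gyro_rate * dt   # responsive, but drifts with bias
        accel_angle = accel_tilt_deg(ax, az)  # drift-free, but noisy in motion
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history

# Synthetic data (assumed): device held still at 10 degrees,
# gyroscope reporting a constant 0.5 deg/s bias.
true_angle = 10.0
bias_dps, dt, n = 0.5, 0.01, 2000
ax = math.sin(math.radians(true_angle))
az = math.cos(math.radians(true_angle))
samples = [(bias_dps, ax, az)] * n

fused = complementary_filter(samples, dt, angle0=true_angle)
gyro_only_end = true_angle + bias_dps * dt * n  # plain integration of the biased gyro

print(f"gyro only: {gyro_only_end:.2f} deg, fused: {fused[-1]:.2f} deg")
```

In this synthetic run the gyro-only estimate drifts ten degrees away from the true tilt over 20 seconds, while the fused estimate stays within a fraction of a degree, which is the kind of system-level gain the article attributes to fusion software.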

“To ease the sensor fusion process, you either need to increase your computing power or to create some machine learning algorithms to process all this data, to do the classification, [to know] what data is coming from which sensor and what the data is telling me,” Ulm said.

Also challenging is the processing power: “You need more power to crunch your data, but in the end, you need to reduce your power consumption so that your devices can last long,” said Damianos. This is particularly true in consumer applications.

“Sensor fusion is not at its limits and requires further research and development,” noted Ulm. Bosch’s approach consists of leveraging AI and software synthesis to make consumer electronic devices smarter. “Software synthesis refers to ways of automatically generating code based on domain knowledge and given constraints for specific product versions,” Ulm commented. “Sensor fusion techniques enable a level of automation that creates new opportunities for more complex sensor fusion, formerly out of reach when using traditional approaches that involved, for example, big data and a large number of potential data sources.”
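As a rough illustration of what generating code from domain knowledge and constraints can look like, the toy sketch below picks a sensor data rate that fits each product's power budget and emits a C configuration header. Everything in it is hypothetical: the current-draw table, the macro names and the product constraints are invented for the example and do not describe any Bosch tool or part.

```python
# Hypothetical domain knowledge: estimated current draw (microamps)
# for each output data rate (Hz). Values are invented for illustration.
CURRENT_UA_BY_ODR_HZ = {25: 15, 100: 50, 400: 180, 1600: 700}

def pick_odr(max_current_ua, min_odr_hz):
    """Return the fastest data rate that satisfies both constraints."""
    candidates = [
        odr for odr, ua in CURRENT_UA_BY_ODR_HZ.items()
        if ua <= max_current_ua and odr >= min_odr_hz
    ]
    if not candidates:
        raise ValueError("no data rate satisfies the constraints")
    return max(candidates)

def synthesize_header(product, max_current_ua, min_odr_hz):
    """Generate a C header (as text) for one product version."""
    odr = pick_odr(max_current_ua, min_odr_hz)
    guard = f"{product.upper()}_SENSOR_CONFIG_H"
    lines = [
        f"#ifndef {guard}",
        f"#define {guard}",
        f"#define SENSOR_ODR_HZ {odr}",
        f"#define SENSOR_CURRENT_BUDGET_UA {max_current_ua}",
        "#endif",
    ]
    return "\n".join(lines) + "\n"

# Two product versions with different (assumed) constraints.
wearable = synthesize_header("wearable", max_current_ua=60, min_odr_hz=25)
headset = synthesize_header("vr_headset", max_current_ua=1000, min_odr_hz=400)

print(wearable)
print(headset)
```

The same generator yields a low-power configuration for the wearable and a high-rate one for the headset, so a single hardware platform can be adapted to each product version without hand-written variants.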

Software adds value not only to the sensor, but to the entire system. It is also becoming increasingly intelligent, enabling AI inside the MEMS sensor itself. “We have been hearing a lot about AI, but I would like to advocate for edge AI as a solution for the good of our industry and for the acceptance of use cases,” said Ulm. Having AI locally, directly in the MEMS device, would help develop new applications and be beneficial for the users.

Running AI algorithms at the edge indeed offers several user benefits. The first one is personalization, where calculation is performed locally and optimized for the user based on his or her individual behavior. The second is the privacy of user data. Data, Ulm explained, is kept private because it is processed at the edge without cloud involvement. The third benefit is real-time feedback. “By passing stuff to the cloud and getting it back, you experience some latency and, in many cases, it is unwanted.” Executing at the edge avoids data transfer and reduces latency. The fourth is improved battery life, thanks to local processing.

Edge AI, however, remains subject to critical success factors. “It’s essential to understand and control not only the data but also the algorithms, especially for edge AI,” said Ulm. “We need three things to make edge AI successful,” starting with algorithms that operate on devices at the edge under constraints. “It’s important to understand these algorithms and to develop new algorithms that are able to operate under such conditions.” Second is the data, which is generated in real time and has to be processed in real time. The third one is the context, which means the data needs to be interpreted in such a way that it makes sense for the particular use case and delivers value in a particular situation, Ulm explained. “That value could be, for instance, real-time feedback on how to do things differently based on the situation.”

Edge AI is still at an early stage of its development. Various challenges have not yet been overcome, as the machine learning community has mainly focused on cloud-based solutions to address big data and large-scale problems, said Ulm.

Primary challenges are power consumption and Moore’s Law limitations, noted Damianos. “We all know that Moore’s Law is slowly dying. We are not sure it will die, but at least it will slow down, imposing some physical boundaries to how many transistors you can fit in your processor unit.” Another identified challenge is data privacy regulation. “In Europe, we have GDPR, but we need to see what happens with other continents.”

Asked how MEMS sensor companies can make a difference with edge AI and prove their legitimacy over cloud providers, Ulm cited the many use cases where it is possible and beneficial to generate customer value at the edge very close to the sensor. “Here MEMS sensor companies can bring in their competencies in dealing with constraints at the edge to enable local machine learning solutions.” Above all, “we know the working functions in sensors.”