FPGAs Target Xilinx’s Versal, Intel’s Agilex

Article By: Rick Merritt

The Speedster7t high-end FPGA family is geared for deep learning and aims to be simpler and lower-cost than Xilinx's Versal and Intel's Agilex

Achronix is back in the game of providing full-fledged FPGAs with a new high-end 7-nm family, joining the gold rush in silicon for accelerating deep learning. It aims to leverage a novel AI block design, a new on-chip network, and GDDR6 memory to deliver performance similar to that of larger rivals Intel and Xilinx at a lower cost.

Achronix debuted in 2004 with an asynchronous design, one of a handful of ambitious FPGA startups at the time. Today, it is the sole survivor thanks to several generations of clever designs and nimble shifts, such as a recent turn to selling embedded FPGA blocks rather than full chips.

The Speedster7t marks the company's return to FPGA chips, targeting the hot market for AI acceleration. Achronix claims that its mid-range Speedster7t 1500 chip can run the ResNet-50 and YOLOv2 neural-network models at 8,600 and 1,600 images/second, respectively.

Like many of its rivals, the company is targeting users such as hyperscale data centers and large networking and storage OEMs. It will start to ship chips and PCIe Gen 5 accelerator boards before the end of the year.

By the time the first hardware ships, Achronix hopes to have software ready to program the chips directly from TensorFlow. Resources and user demand permitting, it will add support for some of the many other AI frameworks as well as the P4 language. For traditional FPGA users, it will offer its existing RTL programming tools.

“It appears they have a lead customer who has signed up for FPGAs, otherwise they wouldn’t go down this path,” said Bob Wheeler, a senior analyst with the Linley Group. “I believe they had one large lead customer for their past product and likely got them to sign up for the next one.”

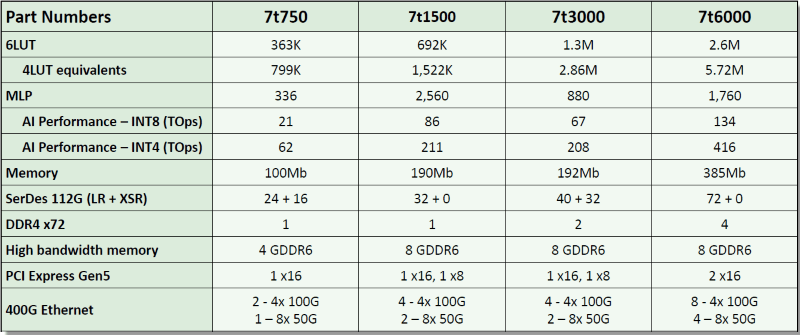

The Speedster7t line includes four chips with 363,000 to 2.6 million six-input lookup tables, as well as a number of hardened blocks for high-speed interfaces, including 400 Gigabit Ethernet. That puts the line on par with the competition. However, the 150-person company, with annual revenues of about $100 million, competes with Intel and Xilinx, which rake in $2 billion to $3 billion a year in FPGAs.

Xilinx is preparing to ship its Versal, a 7-nm family also sporting AI accelerator blocks as well as Arm cores and more. For its part, Intel recently disclosed that its 10-nm Agilex will ship next year, supporting its proprietary EMIB links for standard and custom chiplets. Microsoft already uses existing Intel FPGAs as accelerators on its data center servers.

Given its constrained resources, Achronix will not try to compete with either company on chips with cache-coherent links to a processor. Xilinx helped launch the CCIX interface in 2016, and in March, Intel launched a competing initiative for the CXL link.

The moves create ecosystems that Achronix cannot rival. However, “coherency hasn’t been a big part of the market, and it’s an open question if it ever will be,” said Wheeler. “My take is it will not be big for inference jobs where these parts will be used, but it will be more for training where Nvidia dominates.”

The Speedster7t line aims at similar performance for lower cost, greater flexibility, and easier implementation than its rivals.

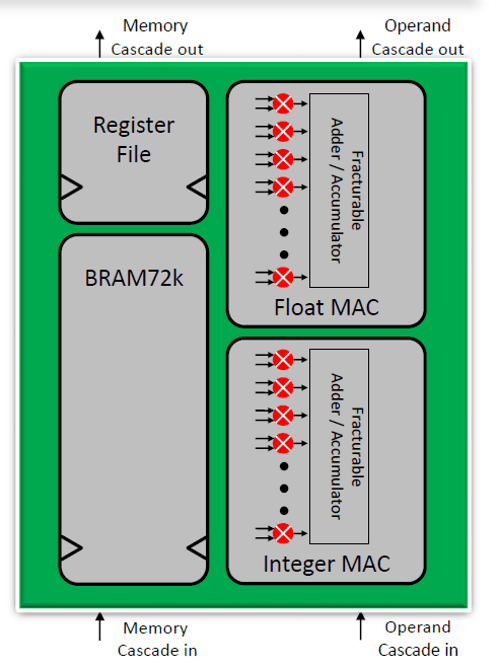

For example, its AI block can be configured to support a range of formats, including 4- to 24-bit integer, 16- and 32-bit floating point, and the emerging bfloat16 format. It is a hardened version of the embedded core launched late last year. The blocks run at 750 MHz, with their number varying by the format chosen.
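For readers unfamiliar with bfloat16, the Python sketch below shows how the format relates to standard 32-bit floating point: it keeps float32's sign bit and 8-bit exponent but only 7 mantissa bits, so a value can be converted by rounding and then keeping the upper 16 bits of its float32 bit pattern. This is a generic illustration of the format that assumes round-to-nearest-even; it does not describe how Achronix's AI block performs conversions, and the function names are hypothetical.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision bit pattern of x as an int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float32(bits: int) -> float:
    """Interpret a 32-bit integer as an IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bfloat16(x: float) -> int:
    """Round x to bfloat16 (round to nearest, ties to even); return 16 bits.

    Hypothetical helper. bfloat16 is the top half of a float32, so we add a
    rounding bias to the discarded low 16 bits and shift. NaN handling is
    omitted for brevity.
    """
    bits = float32_bits(x)
    lsb = (bits >> 16) & 1            # lowest bit that survives truncation
    rounding_bias = 0x7FFF + lsb      # exact ties round toward an even result
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16(b: int) -> float:
    """Widen a bfloat16 bit pattern back to float32 by appending 16 zero bits."""
    return bits_to_float32(b << 16)


for value in (1.0, 3.14159, -2.0):
    b = to_bfloat16(value)
    print(f"{value} -> 0x{b:04X} -> {from_bfloat16(b)}")
```

Because bfloat16 keeps the full float32 exponent, it covers the same dynamic range as float32 while giving up precision: 3.14159, for instance, comes back as 3.140625.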

The blocks have similar performance to the vector engines in Versal, said Steve Mensor, vice president of marketing for Achronix. Some users will prefer the vector engines and Arm cores in Versal, but “for those who don’t, we are superior.”

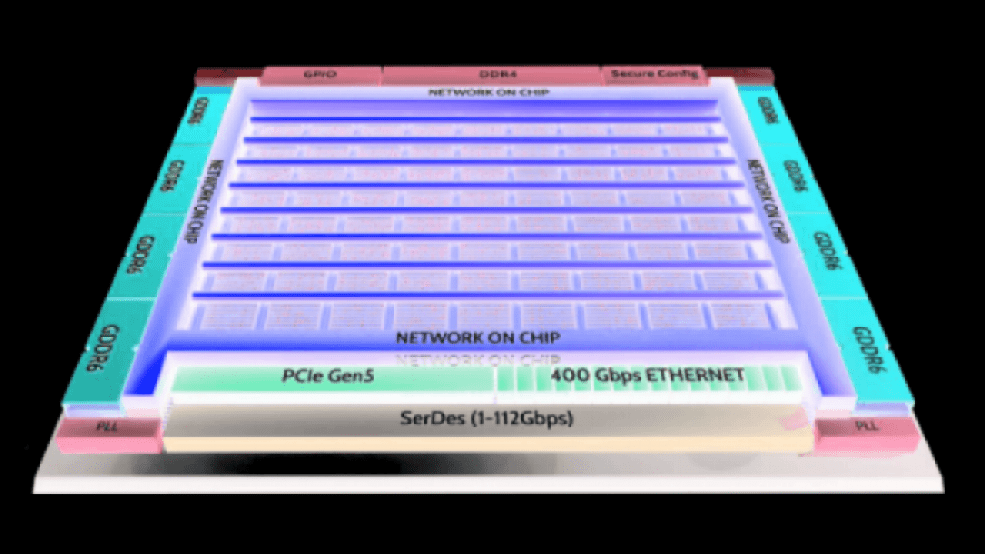

Achronix claims that its new 2D on-chip network is a major differentiator. The network spans the chip’s entire fabric, whereas rival chips link their networks only to major high-bandwidth blocks.

Each row or column in the network is implemented as two 256-bit AXI channels running at 2 GHz, giving users a default link that delivers 512 Gbps to their custom blocks.
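The 512-Gbps figure follows from simple arithmetic: a 256-bit channel moving one full-width word per cycle at 2 GHz carries 256 × 2 × 10⁹ bits per second. The sketch below works through it, assuming peak rates with no AXI protocol overhead. The helper name is hypothetical, and treating the two channels as carrying traffic in opposite directions is an assumption rather than a detail given here.

```python
def channel_bandwidth_gbps(width_bits: int, clock_ghz: float) -> float:
    """Peak bandwidth of one channel in Gbps: bits per cycle x GHz.

    Hypothetical helper; assumes one full-width transfer every clock and
    ignores AXI protocol overhead.
    """
    return width_bits * clock_ghz


# Figures from the article: 256-bit AXI channels clocked at 2 GHz.
per_channel_gbps = channel_bandwidth_gbps(width_bits=256, clock_ghz=2.0)
per_channel_gbytes = per_channel_gbps / 8  # convert bits to bytes

# Assumption: the two channels in a row or column carry traffic in opposite
# directions, so each direction sees one channel's worth of bandwidth.
row_total_gbps = 2 * per_channel_gbps

print(f"per channel: {per_channel_gbps} Gbps ({per_channel_gbytes} GB/s)")
print(f"both channels combined: {row_total_gbps} Gbps")
```

Under those assumptions, one channel matches the 512-Gbps default link, or 64 GB/s.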

As a result, users “just build accelerators, not the connectivity to functions,” said Mensor. He added that the on-chip network was the brainchild of a senior Achronix engineer, whom he did not name, whose background includes work on FPGAs at Altera and on-chip networks at Arteris.

Xilinx pioneered the use of TSMC’s CoWoS packaging to deliver dense memory to its FPGAs. Achronix takes a lower-cost route, putting hardened GDDR6 blocks on the Speedster7t.

Up to eight GDDR6 controllers offer up to 4 Tbits/s of throughput and about as much memory as rivals pack. The approach lowers costs by as much as five-fold, although the external memory requires more board space.
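The 4-Tbits/s figure is consistent with a back-of-the-envelope calculation. The sketch below assumes each controller drives a 32-bit GDDR6 interface (two 16-bit channels) at 16 Gbits/s per pin; those interface parameters are illustrative assumptions, not figures stated by Achronix, and the helper name is hypothetical.

```python
def aggregate_bandwidth_gbps(controllers: int, bus_width_bits: int,
                             gbps_per_pin: float) -> float:
    """Peak aggregate bandwidth: controllers x data pins x per-pin rate.

    Hypothetical helper; ignores refresh, bus turnaround, and other
    overheads that reduce real-world throughput.
    """
    return controllers * bus_width_bits * gbps_per_pin


# Eight controllers is from the article; the 32-bit width and the
# 16 Gbps/pin data rate are assumptions made for illustration.
total_gbps = aggregate_bandwidth_gbps(controllers=8, bus_width_bits=32,
                                      gbps_per_pin=16.0)
total_tbps = total_gbps / 1000   # Gbits/s -> Tbits/s
total_gbytes = total_gbps / 8    # Gbits/s -> GBytes/s

print(f"{total_gbps} Gbps = {total_tbps} Tbps = {total_gbytes} GB/s")
```

Sustained throughput in a real design would fall below this peak once memory overheads are counted.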

The Speedster7t can support up to 72 embedded SerDes running at 1 to 112 Gbits/s. Intel and Xilinx both offer the option of implementing such blocks as separate dies in chip stacks.

The new chips are made in TSMC’s N7 node. Achronix was the first customer of Intel’s foundry service, at 22 nm, but that process took longer than expected to bring up, and Intel’s 14-nm and 10-nm nodes were delayed even more.

“We missed the opportunity to compete with parts made in TSMC’s 16-nm node,” said Mensor, partly explaining the company’s decision to switch to supplying embedded FPGA cores.

He downplayed the lack of embedded Arm cores, something that both Intel and Xilinx offer. “We believe our job is accelerating CPUs instead of trying to be the center of the system,” he said.