Neuromorphic Looks Set to Improve AI

Article By: Anne-Françoise Pelé

Neuromorphic techniques will be the path toward progress, according to market research firm Yole Développement.

When Apple CEO Tim Cook introduced the iPhone X, he claimed it would “set the path for technology for the next decade.” While it is too early to tell, the neural engine used for face recognition was the first of its kind. Today deep neural networks are a reality, and neuromorphic appears to be the only practical path to make continuing progress in AI.

Facing data bandwidth constraints and ever-rising computational requirements, sensing and computing must reinvent themselves by mimicking neurobiological architectures, claimed a recently published report by Yole Développement (Lyon, France).

In an interview with EE Times, Pierre Cambou, Principal Analyst for Imaging at Yole, explained that neuromorphic sensing and computing could solve most of AI’s current issues while opening new application perspectives in the next decades. “Neuromorphic engineering is the next step towards biomimicry and drives the progress towards AI.”

Why now?

Seventy years have passed since mathematician Alan Turing posed the question “Can machines think?”, and thirty years since Carver Mead, an electrical engineer at the California Institute of Technology, introduced the concept of neuromorphic engineering. In the decade that followed, however, researchers had little practical success in building machines with a brain-like ability to learn and adapt. Hope resurged when Georgia Tech presented its field programmable neural array in 2006 and, in 2011, MIT researchers unveiled a computer chip that mimics how the brain’s neurons adapt in response to new information.

The turning point was the publication of the paper “ImageNet Classification with Deep Convolutional Neural Networks” by a group of scientists from the University of Toronto. The AlexNet architecture, an eight-layer convolutional neural network, made it possible to classify the 1.2 million high-resolution images in the ImageNet contest into 1,000 categories (e.g. cats, dogs). “It is only with the development of AlexNet that the deep learning approach proved to be more powerful and started to gain momentum in the AI space.”

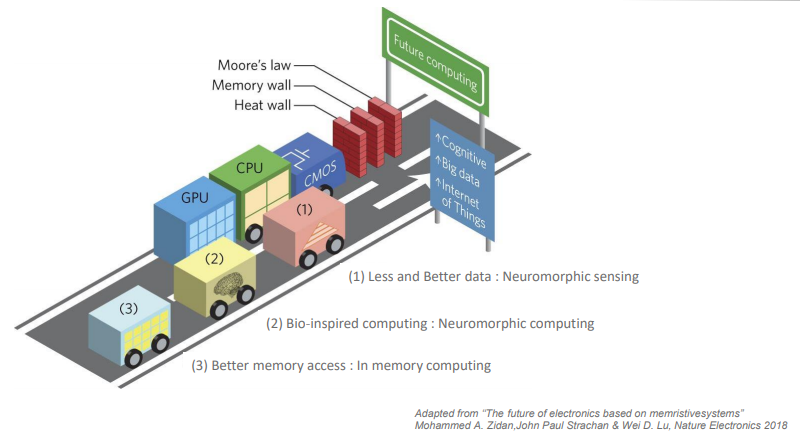

Most current deep learning implementations rely on Moore’s Law, and “it works fine.” But as deep learning evolves, demand will keep growing for chips that can handle computationally intensive tasks. Moore’s Law has been slowing down lately, which has led many in the industry, including Yole Développement, to believe it won’t be able to sustain deep learning progress. Cambou is among those who believe deep learning “will fail” if it continues to be implemented the way it is today.

To explain his point of view, Cambou cited three main hurdles. The first is the economics of Moore’s Law. “Very few players will be able to play, and we will end up with one or two fabs in the world going beyond 7nm. We think it is detrimental to innovation when only Google is able to do something.”

Second, the data load is increasing faster than Moore’s Law, and the data overflow makes current memory technologies a limiting factor. And third, the exponential increase in computing power requirements has created a heat wall for each application. “With 7nm chips, we roughly have an efficiency of one teraflop per watt. To power a Waymo, we probably need one kilowatt, which means we need one thousand teraflops,” said Cambou. The current technology paradigm is unable to deliver on the promise, and the solution could be to apply deep learning on neuromorphic hardware and take advantage of the much better energy efficiency.
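The heat-wall arithmetic in Cambou's example can be reproduced in a few lines. The sketch below is only an illustration: the function name is hypothetical, the 1 teraflop per watt and 1,000 teraflops figures are the ones quoted above, and the tenfold efficiency gain is an assumed value chosen to show the scaling, not a figure from Yole.

```python
def power_budget_watts(required_tflops: float, tflops_per_watt: float) -> float:
    """Power needed to sustain a compute load at a given energy efficiency."""
    if tflops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return required_tflops / tflops_per_watt


# Figures quoted in the article: about 1 TFLOPS/W at 7nm and
# about 1,000 TFLOPS for the autonomous-driving workload.
baseline = power_budget_watts(1000.0, 1.0)

# Assumption for illustration only: a hypothetical 10x efficiency gain.
improved = power_budget_watts(1000.0, 10.0)

print(f"{baseline:.0f} W at 1 TFLOPS/W, {improved:.0f} W at 10 TFLOPS/W")
```

At a fixed workload, the power budget falls in direct proportion to any gain in efficiency, which is why the efficiency of the hardware, rather than raw compute, is the lever Cambou points to.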

Taking a broader look at the current situation, Cambou said it is time for a disruptive approach that utilizes the benefits derived from emerging memory technologies and improves data bandwidth and power efficiency. That is the neuromorphic approach. “The AI story will keep on moving forward, and we believe the next step is in the neuromorphic direction.”

In recent years, there have been many efforts in building neuromorphic hardware that conveys cognitive abilities by implementing neurons in silicon. For Cambou, this is the way to go as “the neuromorphic approach is ticking all the right boxes” and allows far greater efficiencies. “Hardware has enabled neural networks and deep learning, and we believe it will enable the next step in neuromorphic AI. Then we can dream again about AI and dream about AI-based applications.”

Neurons and synapses

Neuromorphic hardware is moving out of the research lab with a convergence of interests and goals from the sensing, computing and memory fields. Joint ventures are being formed, strategic alliances are being signed, and decade-long research initiatives such as the European Union’s Human Brain Project are being launched.

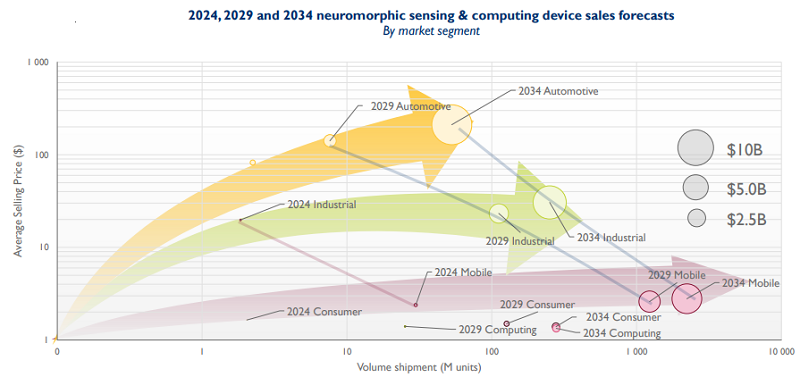

While no significant business is expected before 2024, the scale of the opportunity could be significant for decades after that. According to Yole, if all technical questions are solved in the next few years, the neuromorphic computing market could rise from $69 million in 2024 to $5 billion in 2029 and $21.3 billion in 2034. The ecosystem is large and diverse with prominent players like Samsung, Intel, and SK Hynix, as well as startups such as Brainchip, Nepes, Vicarious and General Vision.
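To put those forecast figures in perspective, the implied compound annual growth rate (CAGR) for each five-year span can be computed directly. This is a reader's back-of-the-envelope sketch using only the three dollar figures cited above; the helper name is hypothetical and the calculation is not taken from the Yole report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Neuromorphic computing market forecast cited in the article (US dollars).
forecast = {2024: 69e6, 2029: 5e9, 2034: 21.3e9}

years = sorted(forecast)
for first, last in zip(years, years[1:]):
    rate = cagr(forecast[first], forecast[last], last - first)
    print(f"{first}-{last}: {rate:.1%} per year")
```

The first span implies the market more than doubling every year from a small base, while the second span implies a much slower, though still steep, growth rate.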

Neuromorphic chips are no longer a theory, but a fact. In 2017, Intel introduced Loihi, its first neuromorphic research chip, composed of 130,000 neurons. In July, the Santa Clara group hit a new milestone with its 8 million neuron neuromorphic system, codenamed Pohoiki Beach, comprising 64 Loihi research chips. Similarly, IBM’s TrueNorth brain-inspired computer chip has 1 million neurons and 256 million synapses, and Brainchip’s Akida neuromorphic system-on-chip has 1.2 million neurons and 10 billion synapses.
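The neuron and synapse counts above are easier to compare as ratios. The short sketch below uses only the figures quoted in this article; the function and variable names are hypothetical, and the counts are the headline numbers, not detailed chip specifications.

```python
def synapses_per_neuron(neurons: int, synapses: int) -> float:
    """Average number of synapses available per neuron."""
    return synapses / neurons


# (neurons, synapses) as quoted in the article.
chips = {
    "IBM TrueNorth": (1_000_000, 256_000_000),
    "Brainchip Akida": (1_200_000, 10_000_000_000),
}

for name, (neurons, synapses) in chips.items():
    ratio = synapses_per_neuron(neurons, synapses)
    print(f"{name}: about {ratio:,.0f} synapses per neuron")

# Pohoiki Beach: 64 Loihi chips at roughly 130,000 neurons each.
pohoiki_neurons = 64 * 130_000
print(f"Pohoiki Beach: about {pohoiki_neurons:,} neurons")
```

The 64-chip total comes out slightly above the rounded 8 million figure, consistent with the per-chip count given for Loihi.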

“There is a race for providing hardware that would raise the bar in terms of neurons and synapses. Synapses are probably more important than neurons,” said Cambou. “At Yole, we see two steps ahead of us. First, the applications that will be built upon the current approach, in part asynchronous and in part from Von Neumann.” Good examples are Brainchip’s Akida and Intel’s Loihi. “Then, probably within the next 10 to 15 years, we will get RRAM [resistive random-access memory] on top of it. That will allow for more synapses to be created.”

Neuromorphic computing efforts come from memory players like Micron, Western Digital and SK Hynix, but many are seeking more short-term revenues and ultimately may not become strong players in neuromorphic research. “We should look at small players that have chosen neuromorphic as their core technology,” Cambou said.

Disruptive memory startups such as Weebit, Robosensing, Knowm, Memry, and Symetrix are combining non-volatile memory technology with neuromorphic computing chip designs. They have emerged alongside pure-play memory startups such as Crossbar and Adesto, but their memristor (memory resistor) approach is often perceived as more long-term than efforts from pure-play computing companies. “A lot of memory players are working on RRAM and phase-change memories to mimic the synapse,” said Cambou. Also, “the MRAM [magnetoresistive random access memory] is part of the emerging memories that will help the neuromorphic approach to succeed.”

Besides computing, a neuromorphic sensing ecosystem has emerged, with roots in the invention of a Silicon Neuron by Misha Mahowald at the Institute of Neuroinformatics and ETH Zurich in 1991. Current competition is low, with fewer than ten players globally. Among them, Prophesee, Samsung, Insightness, Inivation and Celepixel are providing ready-to-use products such as event-based image sensors and cameras. The frame-based approach, as used in cinematography, is unable to capture motion.

“Cinema is tricking our brain, but we can’t trick a computer,” said Cambou. “The only right way to do it is to give the same information the eyes are giving. Event-based cameras are very strong for any kind of motion understanding and pattern understanding in real time.” More broadly, auditory, imaging and behavioral sensors have “an impact at every level of what we call general intelligence.”
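The difference between frame-based and event-based sensing can be illustrated with a toy model. The sketch below assumes the commonly described principle of event-based vision, in which a pixel reports an event only when its log intensity changes by more than a threshold. It simulates that behavior from two frames purely for illustration; real event sensors do this asynchronously in the pixel circuitry, and the function name, threshold and frame values here are hypothetical and not taken from any vendor named above.

```python
import math


def frame_to_events(prev_log, frame, threshold=0.2, eps=1e-3):
    """Emit (x, y, polarity) events where log intensity changed enough.

    prev_log holds each pixel's reference log intensity and is updated
    in place, but only at pixels that fired an event.
    """
    events = []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            log_i = math.log(value + eps)
            delta = log_i - prev_log[y][x]
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
                prev_log[y][x] = log_i
    return events


# A bright dot moves one pixel to the right on a dim, static background.
frame_a = [[0.1, 0.1, 0.1], [1.0, 0.1, 0.1], [0.1, 0.1, 0.1]]
frame_b = [[0.1, 0.1, 0.1], [0.1, 1.0, 0.1], [0.1, 0.1, 0.1]]

reference = [[math.log(v + 1e-3) for v in row] for row in frame_a]
events = frame_to_events(reference, frame_b)
print(events)
```

Only the two pixels touched by the moving dot produce output; the seven static pixels stay silent, which is the data reduction that makes the approach attractive for real-time motion understanding.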

At the packaged semiconductor level, Yole said it expects neuromorphic sensing to grow from $43 million in 2024 to $2 billion in 2029 and $4.7 billion in 2034.

Automotive, but not only

Automotive is probably the most obvious market, said Cambou. Initial markets are, however, industrial and mobile, mainly for robotics and real-time perception.

In the short term, neuromorphic sensing and computing will be used for always-on monitoring of industrial machines. It will also play a major role in logistics, food automation and agriculture. “While deep learning needs huge data sets, neuromorphic learns extremely quickly from only a few images or a few words and understands time,” said Cambou.

Within the next decade, the availability of hybrid in-memory computing chips should unlock the automotive market, which is desperately awaiting a mass-market autonomous driving technology. “We live in a world of interactions, and neuromorphic will be very strong in giving computers the understanding of unstructured environments.”