Neuromorphic Computing System Reaches 100 Million Neurons

Article By: Sally Ward-Foxton

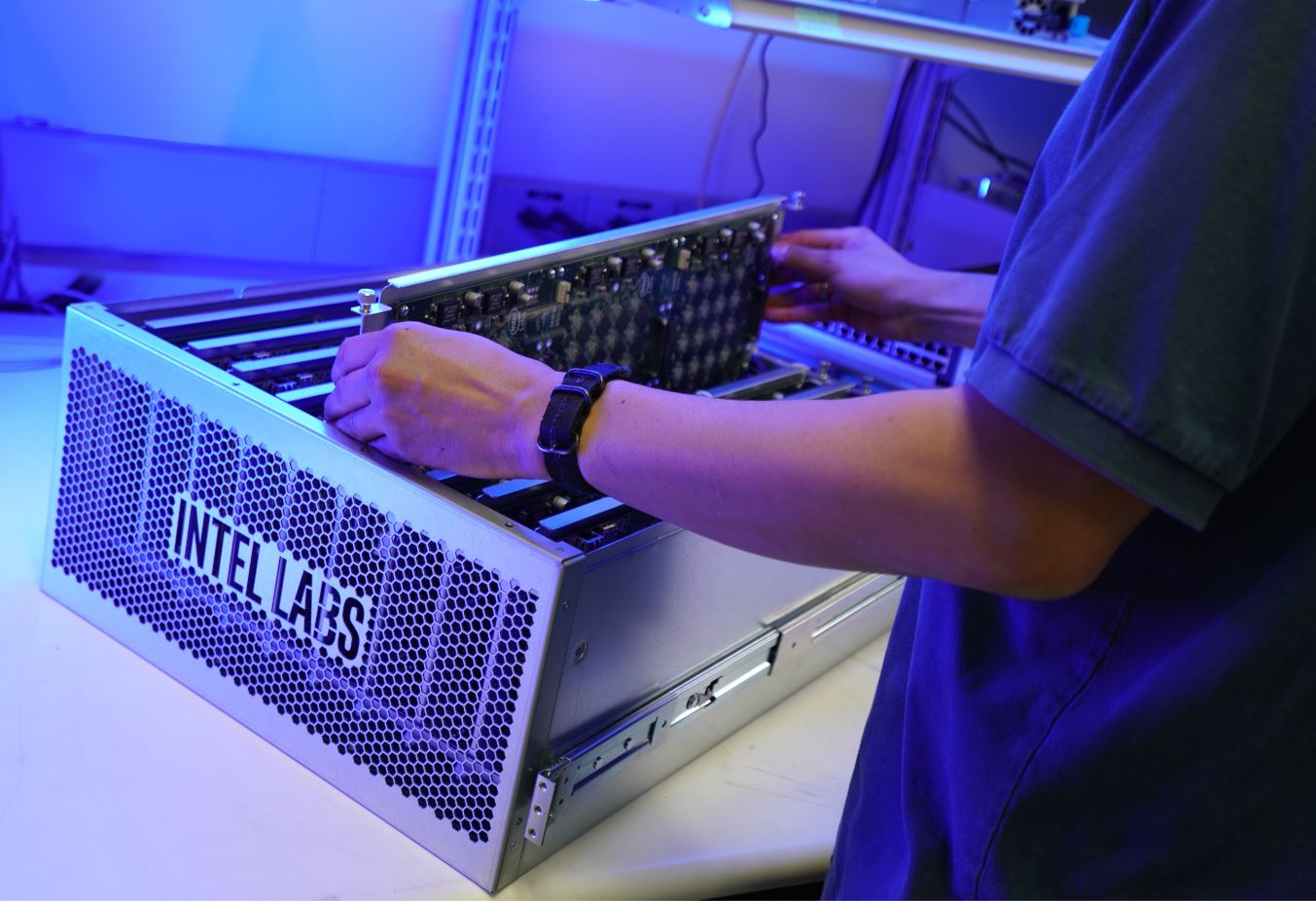

Intel has scaled up its neuromorphic computing system by integrating 768 of its Loihi chips into a five-rack-unit (5U) system called Pohoiki Springs. This cloud-based system will be made available to Intel’s Neuromorphic Research Community (INRC) to enable research and development of larger and more complex neuromorphic algorithms. Pohoiki Springs contains the equivalent of 100 million neurons, about the same number as in the brain of a small mammal such as a mole rat or a hamster.

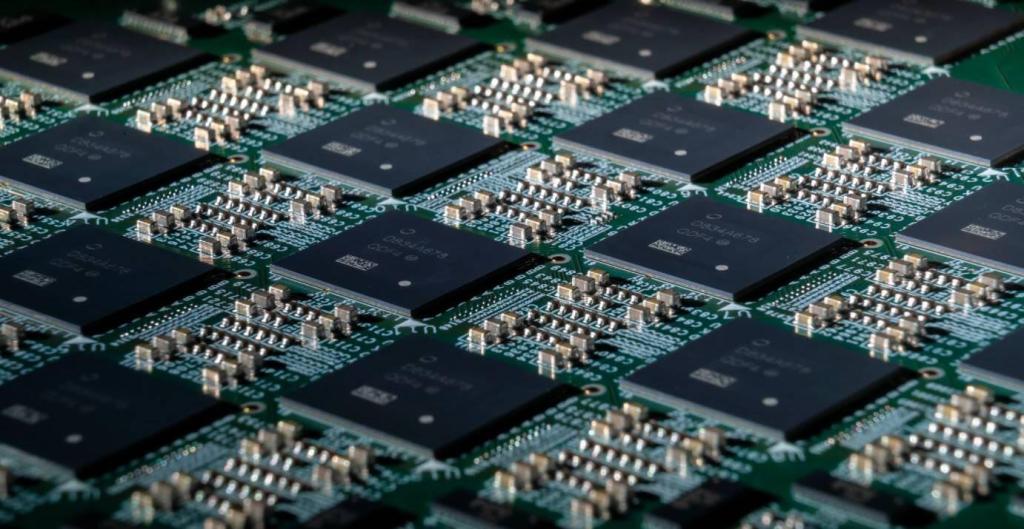

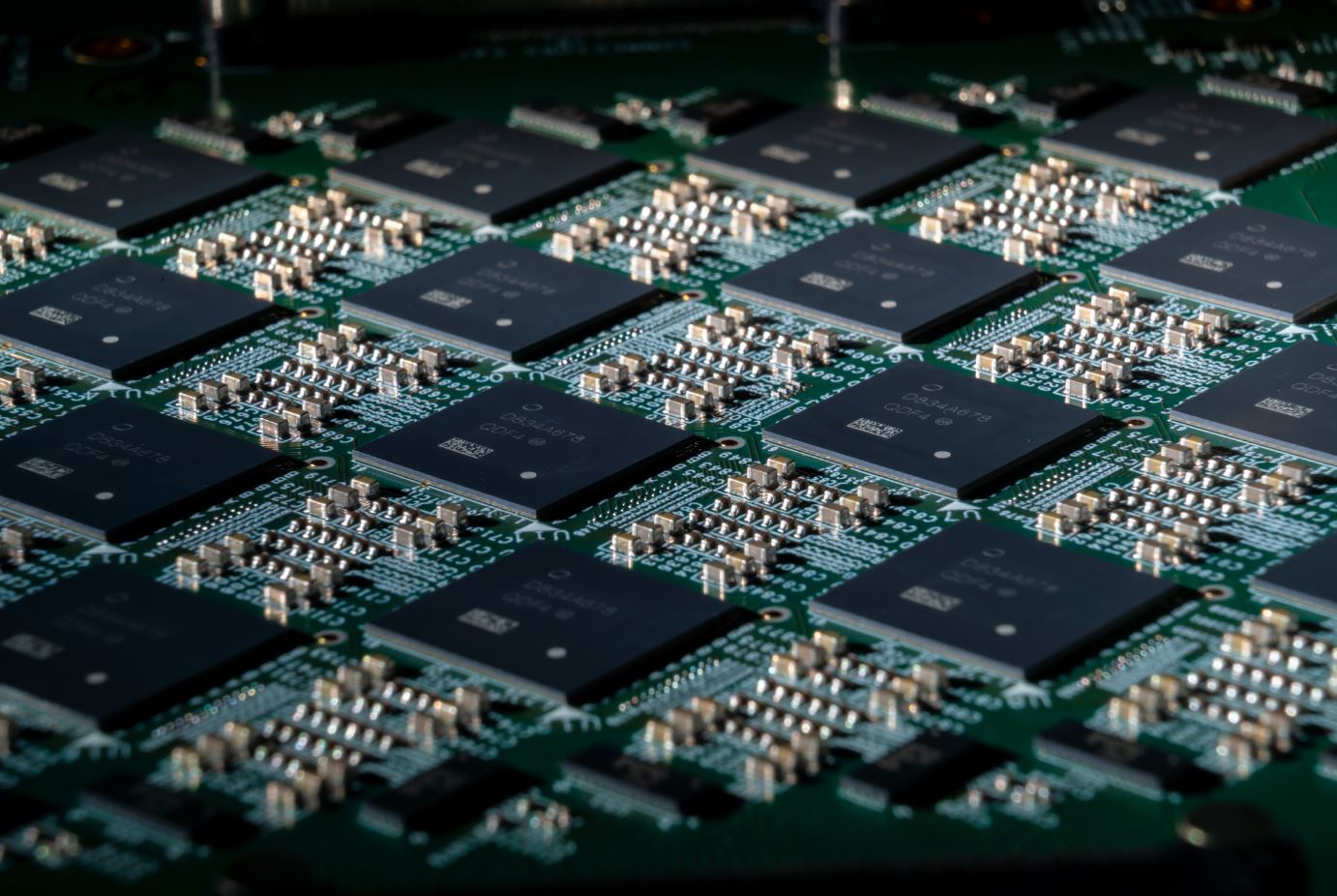

Neuromorphic Chip

Intel debuted its Loihi neuromorphic chip for research applications in 2017. It mimics the architecture of the brain, using electrical pulses known as spikes, whose timing modulates the strength of the connections between neurons. These connection strengths play a role analogous to the weights in an artificial neural network.
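Loihi’s synaptic learning rules are programmable, and the article does not say which ones researchers use. As an illustration only, the textbook pair-based spike-timing-dependent plasticity (STDP) rule shows how spike timing alone can strengthen or weaken a connection: if the input neuron fires just before the output neuron, the weight grows; if it fires just after, the weight shrinks. This Python sketch assumes that generic rule, and the constants (`A_PLUS`, `TAU_PLUS` and so on) are hypothetical values, not Loihi parameters.

```python
import math

# Hypothetical constants for a pair-based STDP rule (illustrative only,
# not Loihi's actual learning-rule settings).
A_PLUS = 0.01     # maximum potentiation step
A_MINUS = 0.012   # maximum depression step
TAU_PLUS = 20.0   # potentiation time constant (ms)
TAU_MINUS = 20.0  # depression time constant (ms)


def stdp_delta(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair, based only on their timing."""
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike came first and may have helped cause the
        # postsynaptic spike, so strengthen the connection.
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        # Postsynaptic spike came first, so weaken the connection.
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def update_weight(w: float, t_pre: float, t_post: float,
                  w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply the STDP change and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta(t_pre, t_post)))


if __name__ == "__main__":
    print(update_weight(0.5, t_pre=10.0, t_post=15.0))  # pre before post: weight grows
    print(update_weight(0.5, t_pre=15.0, t_post=10.0))  # post before pre: weight shrinks
```

The exponential terms make the change largest when the two spikes are close together in time, and the clipping keeps weights bounded.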

Loihi’s architecture uses extreme parallelism, many-to-many communication and asynchronous signals to mimic the brain’s structure (there are no multiply-accumulate units). The aim is to deliver performance improvements on certain brain-inspired algorithms at dramatically lower power.
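The article does not describe Loihi’s neuron model or on-chip routing in detail. The sketch below is a generic, CPU-based event-driven simulation that assumes leaky integrate-and-fire neurons with hypothetical constants (`TAU`, `THRESHOLD`, `DELAY`). It illustrates why spike-based processing needs no multiply-accumulate units: a spike is binary, so delivering it just adds the synaptic weight, and a neuron does work only when an event reaches it.

```python
import heapq
import math

TAU = 10.0        # membrane time constant (ms), hypothetical
THRESHOLD = 1.0   # firing threshold, hypothetical
DELAY = 1.0       # axonal delay (ms), hypothetical


class LIFNeuron:
    """Leaky integrate-and-fire neuron updated only when a spike arrives."""

    def __init__(self) -> None:
        self.v = 0.0        # membrane potential
        self.last_t = 0.0   # time of the last update

    def receive(self, t: float, weight: float) -> bool:
        # Decay the potential analytically for the time since the last event,
        # instead of stepping a global clock while nothing happens.
        self.v *= math.exp(-(t - self.last_t) / TAU)
        self.last_t = t
        # A spike is binary, so its input is simply added: no multiply needed.
        self.v += weight
        if self.v >= THRESHOLD:
            self.v = 0.0    # reset after firing
            return True
        return False


def simulate(synapses, input_spikes, n_neurons, t_end=100.0):
    """Event-driven simulation: work is done only for spikes in the queue.

    synapses: dict mapping source neuron -> list of (target, weight)
    input_spikes: list of (time, target, weight) external input events
    Returns a list of (time, neuron) output spikes.
    """
    neurons = [LIFNeuron() for _ in range(n_neurons)]
    queue = list(input_spikes)
    heapq.heapify(queue)
    fired = []
    while queue:
        t, target, weight = heapq.heappop(queue)
        if t > t_end:
            break
        if neurons[target].receive(t, weight):
            fired.append((t, target))
            # Fan out: one output spike is delivered to many targets.
            for dst, w in synapses.get(target, []):
                heapq.heappush(queue, (t + DELAY, dst, w))
    return fired
```

For example, with `synapses = {0: [(1, 1.2), (2, 0.5)]}` and two input spikes of weight 0.6 reaching neuron 0 at 0 ms and 1 ms, neuron 0 fires at 1 ms and neuron 1 fires at 2 ms, while neuron 2 stays below threshold.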

“We’re computing neural networks in a completely different manner, in a way that’s more directly inspired from how neurons actually process information,” said Mike Davies, director of Intel’s Neuromorphic Computing Lab. “That’s through what’s called spikes — neurons activate and they send messages in an asynchronous event-driven manner across all of the neurons in the chip, processing information in a very different way.”

Loihi, Davies explained, is not directly comparable to conventional AI accelerators such as those produced by Habana Labs, a recent Intel acquisition.

“Neuromorphic computing is useful for a different regime, a different niche in computing than large data, supervised learning problems,” Davies said.

Traditional deep learning uses large amounts of well-labelled data to train enormous networks, at great computational cost. Updating the model weights drives huge I/O bandwidth and memory bandwidth requirements, and training is also relatively slow.

“Neuromorphic models are very different to that,” Davies said. “They’re processing individual data samples. The batch size one regime, we call it, where real world data is arriving to the chip and it needs to be processed right then and there with the lowest latency and the lowest power possible. …What’s different on the edge side compared to even edge deep learning AI chips is that we’re also looking at models that adapt and can actually learn in real time based on those individual data samples that are arriving which the deep learning paradigm does not support very well.”
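To make the “batch size one” idea concrete, the following sketch uses a conventional online learner, a linear model trained with the least-mean-squares (delta) rule, rather than a neuromorphic algorithm. It is an assumption-laden illustration of the regime Davies describes: each sample is processed the moment it arrives, and the model adapts immediately instead of waiting for a labelled batch. The class and function names are hypothetical.

```python
from typing import Iterable, List, Tuple


class OnlineLinearModel:
    """Linear model trained with the LMS (delta) rule, one sample at a time."""

    def __init__(self, n_features: int, lr: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr  # learning rate, hypothetical value

    def predict(self, x: List[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def learn(self, x: List[float], y: float) -> float:
        """Predict, then adapt immediately from this single sample (batch size 1)."""
        y_hat = self.predict(x)
        err = y - y_hat
        self.w = [wi + self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b += self.lr * err
        return y_hat


def run_stream(model: OnlineLinearModel,
               stream: Iterable[Tuple[List[float], float]]) -> List[float]:
    """Process samples as they arrive; nothing is buffered into batches."""
    return [model.learn(x, y) for x, y in stream]
```

Each call to `learn` touches only one sample, so memory use does not grow with the amount of data seen; the trade-off is noisier updates than full-batch training.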

Essentially, deep learning accelerators and neuromorphic computing are complementary technologies solving different types of problems.

Scaling Up

Intel’s previous work in this area had produced smaller systems, such as Kapoho Bay (2 Loihi chips, 262,000 neurons — about the same number of neurons as a fruit fly). Kapoho Bay is intended for the development of algorithms for edge systems, and it has been demonstrated running gesture recognition in real time, reading braille and orienting itself using visual landmarks. It consumes just tens of milliwatts for these types of applications.

Then there is Nahuku, Intel’s Arria 10 expansion board with 8 to 32 Loihi chips (Nahuku boards were used for Intel and Cornell’s olfactory sensing experiment), and Pohoiki Beach, announced last summer, which has 64 Loihi chips.

The new system, Pohoiki Springs, uses 8 boards, each with 96 Loihi chips (plus three Arria 10 FPGA boards used for I/O). Spike-based signalling is used between all of the Loihi chips in the system, and the whole 5U box consumes just 300 W, about the same as you’d expect from a 1U system using traditional computing.
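The neuron counts quoted for these systems follow from simple multiplication. The sketch below assumes Intel’s published Loihi figure of 128 neuromorphic cores per chip with up to 1,024 neurons each (131,072 neurons per chip); that per-chip number comes from Intel’s specifications, not from this article.

```python
# Assumption (Intel's published Loihi specifications, not this article):
# each chip has 128 neuromorphic cores of up to 1,024 neurons.
NEURONS_PER_CORE = 1024
CORES_PER_CHIP = 128
NEURONS_PER_CHIP = NEURONS_PER_CORE * CORES_PER_CHIP  # 131,072

systems = {
    "Kapoho Bay": 2,
    "Pohoiki Beach": 64,
    "Pohoiki Springs": 8 * 96,  # 8 boards x 96 chips = 768
}

for name, chips in systems.items():
    print(f"{name}: {chips} chips, {chips * NEURONS_PER_CHIP:,} neurons")
# Kapoho Bay: 2 chips, 262,144 neurons
# Pohoiki Beach: 64 chips, 8,388,608 neurons
# Pohoiki Springs: 768 chips, 100,663,296 neurons
```

These results match the rounded figures of 262,000 neurons for Kapoho Bay and 100 million for Pohoiki Springs quoted above.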

Algorithmic Applications

Intel has more than 90 research groups signed up for its neuromorphic research community, including academic, government and industry groups. They mostly focus on developing algorithms, Davies said, but the community recently added commercial members including Accenture, Airbus, GE and Hitachi, which will eventually apply these algorithms to problems relevant to their businesses.

Pohoiki Springs will initially be used to develop larger, more complex, more advanced brain-inspired algorithms. Some of the most interesting applications so far are problems that the human brain solves easily but that are computationally difficult for computers.

For example, neuromorphic algorithms are well suited to searching graphs for optimal paths, such as finding the shortest route between locations to provide driving directions (many routes are compared in parallel). Pattern searches, such as searching image and video databases for particular patterns, can also be performed efficiently. Constraint satisfaction problems, which apply to everything from solving Sudoku puzzles to building airline timetables, are also well served by neuromorphic algorithms. And optimization tasks that maximize certain objectives, such as controlling traffic lights to minimize urban traffic, have obvious real-world uses.
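One way a spiking network can search a graph is to let a wave of spikes spread out from the start node, with each connection’s delay set to the length of the corresponding road; the first spike to reach a node marks its shortest distance, and every route is explored at once. The sketch below simulates that idea on a CPU with an event queue, which makes it equivalent to Dijkstra’s algorithm. It is not Intel’s algorithm or Loihi code, it assumes non-negative integer delays, and the function names and example graph are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, int]]]  # node -> [(neighbour, delay)]


def spike_wavefront(graph: Graph, source: str
                    ) -> Tuple[Dict[str, int], Dict[str, Optional[str]]]:
    """Return first-spike times (= shortest distances) and each node's predecessor."""
    first_spike: Dict[str, int] = {}
    parent: Dict[str, Optional[str]] = {}
    # Events are (arrival_time, node, node_that_sent_the_spike).
    events: List[Tuple[int, str, Optional[str]]] = [(0, source, None)]
    while events:
        t, node, sender = heapq.heappop(events)
        if node in first_spike:
            continue  # neuron already fired; later spikes are ignored
        first_spike[node] = t
        parent[node] = sender
        for nbr, delay in graph.get(node, []):
            if nbr not in first_spike:
                heapq.heappush(events, (t + delay, nbr, node))
    return first_spike, parent


def route(parent: Dict[str, Optional[str]], target: str) -> List[str]:
    """Walk predecessors back from a reachable target to recover the path."""
    path: List[str] = []
    node: Optional[str] = target
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]


if __name__ == "__main__":
    roads: Graph = {
        "A": [("B", 4), ("C", 1)],
        "C": [("B", 2), ("D", 5)],
        "B": [("D", 1)],
    }
    dist, parent = spike_wavefront(roads, "A")
    print(dist)                # {'A': 0, 'C': 1, 'B': 3, 'D': 4}
    print(route(parent, "D"))  # ['A', 'C', 'B', 'D']
```

On neuromorphic hardware the delays would be physical spike-propagation times, so the search runs in parallel across the whole network rather than through a single priority queue.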

“The goal here is that we want to solve these kinds of hard problems much faster, or with significantly lower energy than conventional methods,” Davies said. “We have examples now that are a hundred times faster than conventional solutions on CPUs, or thousands of times lower energy. We’re interested in seeing what we can get as we explore this direction to scale up.”

Future Brains

Looking further into the future, commercialization of this technology is still some way off.

“The challenges right now are purely on the algorithmic and ultimately, the software fronts,” Davies said. “There’s certainly more to be done on the hardware; Loihi itself is not in a form that could literally be commercialized, but the challenges there are mostly engineering and not basic research. Where the field is really focused [today] is discovering new algorithms for solving problems, and finding the broadest possible set of those algorithms.”

Davies added that the first commercial applications will be in edge processing, and he singled out the emerging area of event-based cameras as a likely forerunner. This application is actually where neuromorphic computing began in the 1980s; it uses image sensors that natively produce spikes (Samsung, Sony, and startups such as Prophesee are already making these sensors). The most promising domain for event-based cameras is automotive advanced driver assistance systems (ADAS), which must respond rapidly to changes in visual information within a limited power budget.
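An event-based camera reports per-pixel changes instead of full frames. A common simplified model, assumed here and not taken from any vendor’s sensor design, emits an ON or OFF event whenever a pixel’s log intensity has changed by more than a contrast threshold since that pixel’s last event. Real sensors do this asynchronously in each pixel’s analog circuitry; sampling frames, as this hypothetical `frames_to_events` function does, is only a way to simulate the behaviour.

```python
import math
from typing import List, Tuple

Event = Tuple[int, int, int, int]  # (timestamp, x, y, polarity: +1 ON / -1 OFF)


def frames_to_events(frames: List[List[List[float]]], timestamps: List[int],
                     threshold: float = 0.2) -> List[Event]:
    """Simplified dynamic-vision-sensor model.

    frames: list of 2D intensity arrays (rows of positive floats)
    timestamps: capture time of each frame, e.g. in microseconds
    threshold: log-intensity contrast threshold (hypothetical value)
    """
    # Per-pixel reference level: log intensity at that pixel's last event.
    ref = [[math.log(p) for p in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, p in enumerate(row):
                delta = math.log(p) - ref[y][x]
                if abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append((t, x, y, polarity))
                    ref[y][x] = math.log(p)  # reset the reference after an event
    return events
```

Static pixels produce no output at all, which is why such sensors pair naturally with spike-based processors: the data rate, and therefore the processing load, tracks how much of the scene is changing.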

Loihi itself is in a sweet spot: it can scale into larger systems by using multiple chips, yet a single chip is not too big to be useful in edge applications. Davies expects future commercial neuromorphic products to be split into those that cater for data centers and those intended for the edge, similar to the division in today’s AI accelerator offerings.

Future data center systems could grow far beyond Pohoiki Springs.

“We’re really interested in scaling up this architecture over time to go after what we find in nature, which is ever-increasing compute capability as we find larger and larger brains. So starting with Loihi, building up to mammalian size brains, and even further in the future,” Davies said.

The human brain has 86 billion neurons (about 650,000 Loihi chips): watch this space.
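The chip estimate is a single division. This sketch again assumes Intel’s published capacity of 131,072 neurons per Loihi chip (128 cores of 1,024 neurons), which is not stated in the article, and also expresses the result as a multiple of Pohoiki Springs’ 768 chips.

```python
import math

NEURONS_PER_CHIP = 128 * 1024      # assumed Loihi capacity: 128 cores x 1,024 neurons
HUMAN_BRAIN_NEURONS = 86_000_000_000
POHOIKI_SPRINGS_CHIPS = 768

chips_needed = math.ceil(HUMAN_BRAIN_NEURONS / NEURONS_PER_CHIP)
print(f"{chips_needed:,}")                          # 656,128
print(round(chips_needed / POHOIKI_SPRINGS_CHIPS))  # 854
```

In other words, a human-brain-scale system would need roughly 854 times as many chips as Pohoiki Springs.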