Choosing Between AI Accelerators

Article by: Sally Ward-Foxton

And do you even really need one?

As more companies use machine learning in everyday business operations, those investing in their own hardware, for whatever reason, now face a growing choice of accelerators. When choosing between the very different chip architectures coming to market, the obvious criteria are performance, power consumption, flexibility, connectivity and total cost of ownership. But there are others.

Alexis Crowell (Image: Intel)

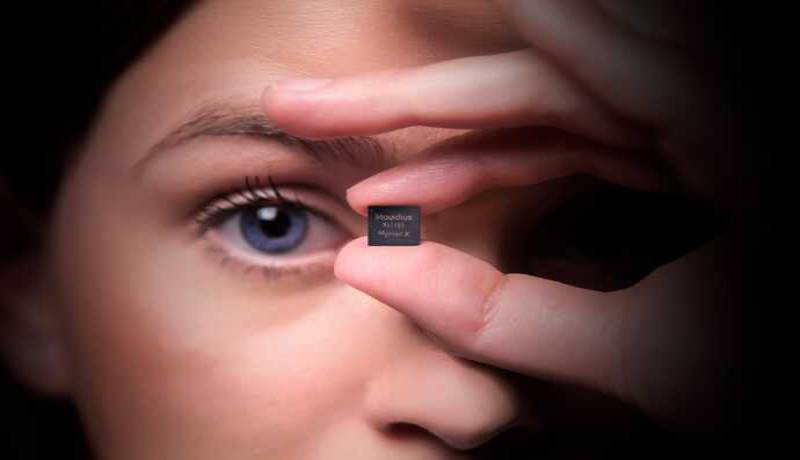

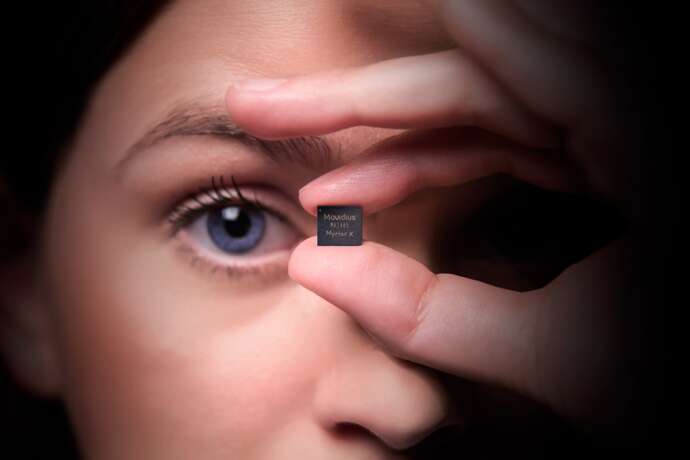

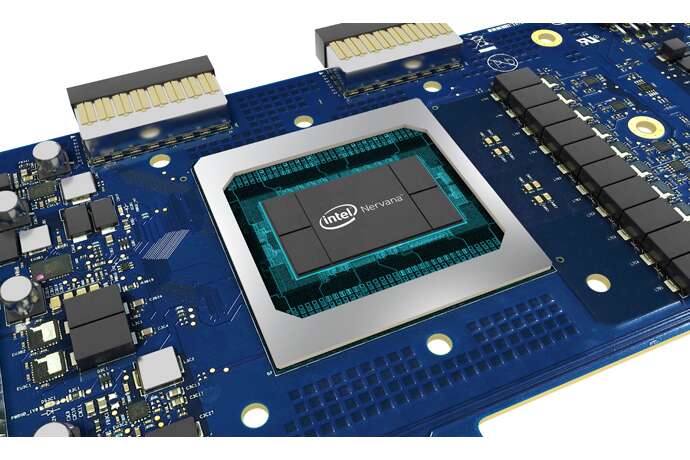

Last week I spoke with Alexis Crowell, Intel’s senior director of AI product marketing, on this topic. Intel offers AI accelerators with very different architectures, including Movidius, Mobileye, Nervana and Loihi, not to mention its CPU products. Crowell was happy to highlight some of the less obvious criteria to consider when choosing an AI accelerator.

Do you really need an AI accelerator?

One of the least obvious questions that should be the most obvious: does your application really demand the latest AI accelerator ASIC?

“This is a very common problem, especially because AI is such a buzzword,” Crowell said. “People really want to do AI, but a lot of people don’t really know what that means, nor do they know where to start.”

Intel’s experience of guiding customers through the process reveals that, outside of the data centre hyperscalers and big cloud service providers, most customers are not yet ready for AI acceleration. The majority will need to spend a lot of time getting their data ready, Crowell said.

“If you’re trying to clean up your data, you don’t need to be investing in expensive accelerator hardware to do that cleanup,” she said. “A lot of our conversations with customers are about figuring out where they are in that process.”

Crowell also noted that some data centre customers may have plenty of spare CPU cycles available. In that case, why spend the money?

How mature is the software stack?

Every new chip architecture will need to be programmed somehow. While no-one is releasing a chip without a corresponding toolchain today, the maturity of these software stacks is a factor for consideration.

“What will be really interesting with all the startups coming to the market is: the software stack for AI is hard,” Crowell said. “Intel has 25 years’ history writing and working in software and we have hundreds of people working on software across our products. I think the integration into frameworks that people are already using, or compiler stacks or whatever that looks like, that is really important for people to understand as they make hardware decisions.”

Intel’s Movidius Myriad X vision processing unit (VPU) targets drones, robotics and smart cameras (Image: Intel)

Have you thought about security?

“Security today is more important than it’s ever been,” Crowell said, highlighting security of the data and security of the model as equally critical.

“There is a lot of security that should go into the data itself to make sure it’s really the data pool or really the population of data that you think it is,” she said. “How are you securing the data ahead of training to make sure that you’re not getting errant input? [How do you know] somebody isn’t weeding out one subset of data so that it starts to spin outcomes?”

Security of the model is just as important. Once a model is deployed in the wild, in an end device, it must be protected from attackers who may try to tamper with it, altering its weights to manipulate the outcome, or simply steal your IP (your model!).

“One of the chips we’re building for inference, we built in RAS [reliability, availability and serviceability] features specifically to help with this problem,” she said. “Because we look at security and AI as foundational, it can’t be that you build a solution and then retrofit it for security. You have to do that from the start.”

Is this chip benchmarked?

Suitable benchmarking for AI accelerators is still in its early stages. Intel is one of the few companies to have submitted results to MLPerf, and also to Baidu’s DeepBench. While Crowell stressed that Intel wants its customers to be able to make fair comparisons between products, and that benchmarks are a good starting point, she expressed some frustration with the models on which today’s benchmarks are often based.

Intel’s Nervana neural network processor comes in training and inference versions (Image: Intel)

“ResNet and MobileNet today are kind of starter topologies and are not indicative of how people actually use AI in the real world,” she said. “But I think it’s a good foundation to try and get everybody on the same page, because with AI there are so many [variables] — batch size, latency requirements, accuracy requirements… you have to start somewhere. Starting with ResNet and MobileNet doesn’t mean we can’t grow into some of the more modern, bigger models that are more apropos of what really happens.”

Crowell’s final point was that benchmarks should be considered as part of the wider picture, one that takes into account all the issues addressed here.

“I always caution customers to not use benchmarks as their entire decision criteria,” she said. “There are so many factors — like everything in life, it’s nuanced.”