AI: There’s More to Chip Performance Than TOPS

Article By : Sally Ward-Foxton

What is the best measure of inference performance?

In the world of AI accelerators, chip performance is frequently quoted in tera operations per second, or TOPS, for a given algorithm. But there are many reasons why this may not be the best figure to look at.

“What customers really want is high throughput per dollar,” said Geoff Tate, CEO of AI accelerator company Flex Logix.

Geoff Tate, Flex Logix

Geoff Tate, Flex Logix

(Image: Flex Logix)

Tate explained that having more TOPS doesn’t necessarily correlate with higher throughput. This is particularly true in edge applications where the batch size is 1. Applications such as data centers may increase their throughput by processing multiple inputs in parallel using larger batches (since they have TOPS to spare), but this is not often suitable for edge devices.

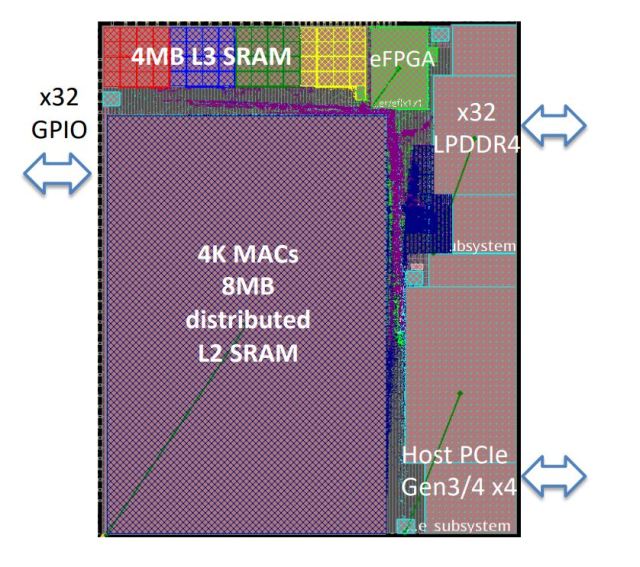

For example, Tate compared Flex Logix’ InferX X1 device with a market-leading GPU device. While the GPU offers three to four times the throughput, with 10x the TOPS, it uses eight times the number of DRAMs. Tate argued that this makes Flex Logix’ architecture a lot more resource-efficient.

Tate’s proposed metric, throughput per dollar, sounds sensible but in practice, it’s not always easy to find reliable cost information for products to allow direct comparisons. Factors such as how much DRAM is required, or how much silicon area a particular chip has can be an indicator of cost, but are by no means precise.

ResNet-50

Another problem with TOPS as a metric is that it’s often measured when running ResNet-50.

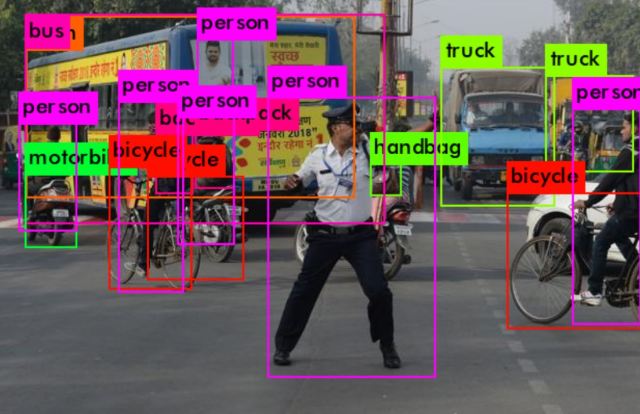

“ResNet-50 is not the benchmark that customers care about, but it is the one that people report the most often,” Tate said. “The reason it’s not very relevant is it uses very small images.”

Now largely seen as out of date, ResNet-50 has been around for some time and has become a de facto standard for quoting TOPS figures. There are good reasons for continuing to use it as a standard; these include trying to keep all scores at least partly comparable going forward, and for keeping this de-facto standard accessible to all types of devices (even tiny ones). However, it is not adequate to really challenge today’s huge chips built for data center inference, nor to show off what they can do.

Industry benchmarks

Aside from the de facto standard, there are of course various organisations developing benchmarks for AI accelerators (see: MLPerf, DawnBench, EEMBC, and others).

While MLPerf has published inference results, Tate’s view is that this benchmark is too data center-oriented. He argues that this is exemplified by the following: in the single-stream scenario, which considers an edge device processing one image at a time (batch=1), the performance metric is 90th percentile latency.

“At the edge, I don’t think customers want to know the 90th percentile, they want to know the 100th percentile. They want to know: what can you guarantee me?” Tate said, citing autonomous driving as an edge application where latency is critical.

Long tail latencies are a classic problem for systems that suffer from bus contention as information is transferred between many processor cores and the memory. While many of today’s devices use high bandwidth memory interfaces there is still a theoretical tail to the latencies while contention is possible.

Flex Logix’ FPGA-based inference processor design has the exact same latency every time (something also claimed by Groq, though they are adamant their device is not an FPGA).

“Since we’re using the FPGA interconnect my co-founder invented at the core, there is a totally dedicated path from the memory through the multiply accumulators, to the logic for activation, and back to memory. So there’s no contention: things just flow. We don’t get a hundred percent utilization, but we get much higher utilization than all the other architectures,” said Tate.

The Market

Regarding the explosion in numbers of chip startups in this sector, Tate is sanguine about Flex Logix’ prospects.

“When the chips come in and the software is run and they show the demos, and when you see the price and the power… very quickly, [companies] who aren’t in the upper quartile will disappear,” he said.

Tate’s prediction is that this sector can support 10 or 15 chip offerings, based on the different market segments (training, inference, data center, edge, ultra-low power, etc.). Offerings available today span multiple orders of magnitude in terms of compute power, so they don’t all compete directly with each other.

“There will be a giant culling of the herd in the next year or two,” says Tate, referring to the famous quote from Warren Buffett: “When the tide goes out, you can see who’s been swimming naked.”